
Let’s review the areas of your enterprise in which AI-ML applications can be beneficial. AI-ML generally helps identify subtle patterns in large datasets. Additionally, repetitive tasks can often be automated with the help of AI and/or robotics, although some tasks will always be hard to automate even with AI. We believe four main areas within a typical business are likely to benefit the most from AI-ML applications: Operations, Customer Engagement, Talent Management, and Financial Management/Risk Management.

For operations, data can identify areas of inefficiency and offer solutions. For example, if your business sells goods, AI can help you track your inventory, forecast demand, and adjust the orders. More and more, customers expect a smooth and pain-free experience for every transaction. Part of that experience is your store ideally having every item in stock.

Additionally, recommendation systems (such as those used by Amazon) can help keep customers coming back. AI also has the potential to transform onboarding and talent retention: it can tailor an individualized onboarding experience and suggest incentives to retain your talent. In the finance department, AI can help identify fraudulent transactions or quickly catch transaction errors. The latter has the additional benefit of improving customer experience. There are also situations in which analytics, not necessarily AI, would help your business make informed decisions. One of the simplest examples is experimentation involving A/B testing to determine which version of your product is most likely to succeed upon release. These insights can be uncovered using visualizations and/or statistical models.
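As a rough illustration of the statistical side of A/B testing, the sketch below compares the conversion rates of two product versions with a two-proportion z-test. The function name and the visitor and conversion counts are hypothetical, and a real experiment would also need a pre-planned sample size and stopping rule.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for H0: both versions convert equally."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value of the standard normal, via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests that the difference between the versions is unlikely to be due to chance alone; whether the difference matters commercially is still a business judgment.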

The application of data science, ML, and AI is vast. Listing details about these applications is outside the scope of this article. However, we can offer some numbers that may motivate your company to incorporate a data science practice.

According to the McKinsey Global Survey, 79% of 1,843 participants credited AI with part of their business cost reduction in 2020 [1].

Across the globe, AI adoption in at least one portion of the business has increased to 57% compared to 50% in the previous year. Of the companies whose AI applications successfully reduced costs, 30% saw an expense reduction of more than 20%. As a side note, the 2019 to 2022 AI adoption increase is sharper in emerging economies such as China. We also believe that analytics and data reporting will likely uncover inefficiencies in your processes. Investing in data infrastructure and data analysts would bring a faster return on your investment. In fact, they set the stage for an ML and AI practice by providing a platform and domain expertise. If your company does not have talent in data infrastructure and analytics, the data science team might be unreasonably burdened.

We have been helping small and medium-sized enterprises as well as large enterprises in their digital transformation journey for about 20 years. We have worked with over 400 companies from various domains, such as multimedia, finance, and healthcare. In our journey, we have learned practices that result in revenue, and we have also seen practices that never progress beyond exploratory work. Very few companies today have been able to reap the real benefits of data and AI. If you are now convinced that you want your business to make data-backed, informed decisions or to incorporate AI into its practice, this paper provides some guidelines on what to keep in mind when starting out. We hope that these guidelines will help in the successful incorporation of an ML and AI practice, resulting in organizational efficiency and business/revenue growth.

In this paper, we discuss the roles of three organizational entities that play key roles in data science practice:

(1) the data science, data engineering, and machine learning team,

(2) domain experts and analysts who have knowledge of the business and day-to-day activities, and

(3) the business unit, comprising stakeholders who oversee roadmaps, prioritize projects, allocate resources, and take care of process bottlenecks. We stress the roles of domain experts and stakeholders in a productive data science practice.

These entities are involved in overseeing five critical elements of the practice:

- Collaboration between data science and business stakeholders

- Setting achievable goals with measurable impact

- Domain knowledge

- Data availability and quality

- Infrastructure

In the following sections, we discuss these points and our industry experience. We conclude this paper with our outlook moving forward.

Business units and data science teams in small and medium-sized enterprises often work in silos. The business decision-makers do not know the data science capabilities and what kind of projects can be executed to help the business. On the other hand, data science teams may not be fully aware of the business problems and their impact. If your business does not already have a fluid communication between the two units, it is imperative to bridge this gap.

We have found that knowledge transfer between the teams results in better outcomes. Involving all teams in the discussions creates a constructive environment that yields a stronger product. We understand that establishing this communication may be difficult. However, willingness and patience to learn from each other are critical to the success of a data science project.

In our interactions with various clients, we come across a wide range of communication and interaction levels. Many companies have a clear demarcation between business and technical units. Some companies have better communication between these two units. We worked with one client whose level of communication and involvement impressed us and led to a more than successful outcome.

A Bluetooth-enabled glucometer manufacturer in India approached us to add diabetes advice functionality to their chatbot. The chatbot was there to help customers select a product. To us, this requirement seemed odd at first, so we talked to their project managers. They explained that some patients who bought the glucometers do not understand the meaning of the glucometer readings and seek advice on what to do, given their results.

Our client wanted to help these patients in order to build a brand with diabetes expertise. When they provided us with about 50 previously recorded conversations between medical representatives and patients, we told them that we would need more conversations to properly train their chatbot.

The product managers then started asking questions about how chatbots are trained. The company had access to doctors, dietitians, and health experts, so they suggested that we work more closely with them. Together with these experts, we developed a simulated dataset that covered common diabetic problems in addition to identifying medically critical cases from each generated scenario. We were amazed by their cooperation level and the understanding they gained during the project.

On the other hand, our team learned medical details about diabetes, e.g. type 1 and type 2. The project was successful due to their vision and collaboration, which resulted in a spinoff company that helps diabetic patients manage their health.

In a business-centric approach, we start with stakeholders and domain experts to identify problems with high business impact without worrying about the data and algorithms. Together with the client’s domain experts and data scientists, we then identify the data, the associated challenges, and the line of attack to achieve the goals. Projects that follow a business-centric approach get proper priority, funding, resources, visibility, and project structure. Their measurable impact and the attention they receive result in higher chances of success. Even if they fail, they fail fast, freeing up resources for other impactful projects, as desired in an industrial setting.

To give you an example, we worked with a client on various marketing strategies for obtaining leads and on the journey from prospects to paying customers. Although solutions like Salesforce Einstein provide similar analytics, the client aimed to take control of the process and apply advanced methods. Prospect pipeline prioritization is not a well-published topic in the literature or on the web. The problem was nonintuitive and difficult to solve; however, it had a significant business impact. Eventually, working together with marketing experts and data scientists, we found solutions in a mix of customer churn modeling, survival analysis, and probabilistic modeling. The client gathered relevant data and successfully applied probabilistic models to rank prospects by likelihood of becoming paying customers.

In a data-centric approach, we showcase data science capabilities by making the best use of the available data. The projects of this scheme are usually exploratory, largely leveraging published art, work of open-source communities and academic institutions. Often this exploration becomes indefinite due to new research in AI, new data, or a change of approach by data scientists. Even if projects are successful, they have lower chances of going to the next stage due to misalignment with company priorities. This approach is prevalent today, and we find it partly responsible for the negative impression of data science not contributing to the revenue.

We come across organizations that follow this approach, especially with new data science teams having little business knowledge. Data creation and warehousing make it appealing to explore the data following this approach. For example, the manufacturing industry records a lot of sensor data. Web analytics provides large amounts of data that might look appealing to start data-centric projects. We usually work with the business to narrow the scope of the project and encourage these teams to find business impact first.

When working on scoping projects with clients (again, the emphasis here is communication), we work on setting goals and metrics regardless of the approach we are taking. Sometimes clients approach us with over-ambitious goals, which might look like a good challenge for ML. However, upon closer inspection, the project requires more data and development time than what the client is willing to spend.

For example, we were working with a media company that wanted an audio processing program for identifying profanity in movies.

- They asked us to develop a speech-to-text system that can correctly convert any audio to text.

- We agreed that we could develop such a program; however, spelling every word correctly is a challenging task that is not necessary for this application.

- We also added that speech-to-text solutions exist already, but they are not universal and mainly work in conversation settings.

- Because of the broad nature of the application, using this as an underlying model would likely become computationally costly.

- We advised that a spoken language understanding solution has shown improved precision and lower false alarms for a limited vocabulary such as profanity, for example, the work of Lugosch et al at Facebook [2].

Data Science, ML, and AI applications do not exist without domain experts. They infuse their domain and business knowledge in ML models by:

(a) setting the model evaluation metrics

(b) assigning weights to various errors and

(c) curating test data and overseeing the evaluation scheme.

Any model produces errors, whether because of biased data, wrong assumptions, or other causes. These errors have different impacts on processes and the final product. The ingrained knowledge and diverse perspectives of domain experts make data science useful and relevant to the business and influence how successful the result is. Partnering with them helps us identify these errors, assign their relative weights, and develop business-oriented evaluation metrics. After the error weights are identified, we either incorporate the error correction into the ML model or develop a post-processing correction routine. We further emphasize the importance of domain expertise in testing and evaluation in the coming sections.

In a classification setting, there are two types of errors that a model can make: false positives and false negatives [3]. A false positive is like calling a good product defective, whereas a false negative is like giving QC approval to a defective product. Without domain knowledge and business rules, data scientists tend to assign equal weights to both types of errors. Setting these weights without domain insights can be harmful to the business.

For example, we were helping a client develop a product inspection model for solar cells. The developed solution would reject a cell only when the model was absolutely sure of a defect and would pass it in case of doubt. We developed these error-weight rules under the guidance of manufacturing engineers to balance discarding defective products against keeping the scrap rate low. In some scenarios, domain experts might suggest more conservative error weights; for example, the model should not approve a loan for a marginal credit score. Working with domain experts to develop error weights and model evaluation metrics is the key to a solution with built-in business insights [4].
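To make the idea of error weights concrete, here is a minimal sketch of choosing a rejection threshold that minimizes a weighted cost of false positives and false negatives. The scores, labels, and cost values are invented for illustration and are not taken from the solar cell project; in practice the costs would come from domain experts.

```python
def expected_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Total cost of rejecting every item whose defect score is >= threshold."""
    cost = 0.0
    for score, is_defective in zip(scores, labels):
        rejected = score >= threshold
        if rejected and not is_defective:
            cost += cost_fp  # false positive: a good product is scrapped
        elif not rejected and is_defective:
            cost += cost_fn  # false negative: a defective product passes QC
    return cost

def best_threshold(scores, labels, cost_fp, cost_fn):
    """Try each observed score (and 'never reject') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates,
               key=lambda t: expected_cost(scores, labels, t, cost_fp, cost_fn))

# Hypothetical model scores (probability of defect) and true labels (1 = defective).
scores = [0.05, 0.20, 0.35, 0.55, 0.60, 0.80, 0.95]
labels = [0, 0, 0, 1, 0, 1, 1]

# Scrapping a good product is weighted 5x a missed defect: reject only when sure.
strict = best_threshold(scores, labels, cost_fp=5, cost_fn=1)
# A missed defect is weighted 5x a scrapped good product: reject when in doubt.
cautious = best_threshold(scores, labels, cost_fp=1, cost_fn=5)
print(strict, cautious)
```

The same model scores lead to different operating points once the business assigns unequal weights to the two error types, which is why the weights should not default to being equal.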

A lot of data is being produced every second now. However, often, there is a scarcity of useful data that is ready for data science work. Most enterprises these days have systems that generate the data and store that data. A common argument for storing all collected data is that it may become useful someday. In these situations, companies are afraid of throwing away data and end up spending a fortune on storage. We do admit that it is impossible to foresee and know the best data to store. However, we have enough experience to understand common data usage and the best data architecture practices to save cost. Ultimately, your business is in control of what you would want to keep. We collaborate with your organization to come up with the best-catered solution.

In most data science, ML, or AI applications, we cannot use the collected raw data. Therefore, despite the over-abundance of data, this raw data would have to be transformed to become useful. The relevant data is often unstructured and unlabeled, which is not what is needed for data modeling. These transformations can be painstakingly time-consuming and labor-intensive. Once the data is properly transformed and curated, useful data may become scarce. There are multiple ways to deal with this lack of data. We will introduce two common techniques, transfer learning and augmentation, specific to ML, that help alleviate the data scarcity.

Another commonly used technique to compensate for the lack of data is data augmentation [5]. Data augmentation pertains to creating new instances of data from existing ones. For example, if we have an image of a dog and flip it left to right, it is still an image of a dog. A machine learning model, however, sees this as a new data point instead of the same picture flipped. Other examples of image augmentation include adding noise or changing the lighting or focus. Audio augmentation includes changing the frequency, loudness, or pitch, as well as spectrogram-level methods such as SpecAugment [6]. Previously, we have worked on developing new augmentation methods to cater to a specific need.
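The two simplest image augmentations mentioned above, a left-to-right flip and additive noise, can be sketched in a few lines. This is a toy illustration on a tiny grayscale image stored as nested lists; the helper names and the noise level are assumptions, and production pipelines would normally rely on an image library instead.

```python
import random

def flip_left_right(image):
    """Mirror each row of a grayscale image given as a list of pixel rows."""
    return [list(reversed(row)) for row in image]

def add_noise(image, sigma, rng):
    """Add Gaussian noise to every pixel and clip the result to 0..255."""
    return [
        [min(255, max(0, round(px + rng.gauss(0, sigma)))) for px in row]
        for row in image
    ]

# A tiny 2x3 "image" used only for illustration.
image = [[10, 50, 200],
         [30, 90, 250]]

rng = random.Random(0)  # fixed seed so the augmentation is reproducible
flipped = flip_left_right(image)
noisy = add_noise(image, sigma=8.0, rng=rng)

# Each original image now contributes three training examples with the same label.
augmented = [image, flipped, noisy]
```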

For example, we previously worked on developing an audio model to detect gunshots in movies for a media company.

We found that Google’s Yamnet model can differentiate over 500 types of sounds, including gunshots [7].

Because of how the Yamnet model is designed, if a gunshot sound is split between two consecutive frames of 500 ms, the model may fail to detect it.

We wanted to make a customization so that no gunshots are missed.

Fortunately for us, Google released the data used to train the Yamnet model [8].

However, we are cautious when using third-party data because we were not involved in the generation of these embeddings (including labeling).

We do not know what kind of biases the data have and what kind of effect these biases would have on the model.

Our goal is to detect gunshots in movies, but that was not the main goal of the Yamnet model.

In order to further understand the underlying data, we listened to several movies and TV shows from the customer database and compared them with the Google dataset.

We noticed several differences:

> The YouTube videos involved people with real gunshots in open spaces, whereas movie gunshots resemble audio in closed spaces like hotel rooms, banks, etc.

> Most YouTube clips were recorded using a cell phone whose capabilities do not record the full spectrum of audio frequencies used in movies.

> Many YouTube files contain videogame recordings that are clearly different from those in movies.

> Most movie scenes contain some background music that is not present in YouTube clips.

From these learnings, we carefully curated an audio dataset by removing irrelevant audio clips.

Further, we produced augmented audio data by mixing YouTube clips with movie clips with a range of audio energies.

For testing the models, we only used our client’s data by painstakingly annotating every gunshot from a few movies in each genre.
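The mixing step described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the project: the function names, the synthetic signals standing in for a gunshot and a movie soundtrack, and the chosen signal-to-noise ratios are all assumptions.

```python
import math

def rms(signal):
    """Root-mean-square level of a list of audio samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def mix_at_snr(foreground, background, snr_db):
    """Add background to foreground, scaled so that
    20 * log10(rms(foreground) / rms(scaled background)) equals snr_db."""
    gain = rms(foreground) / (rms(background) * 10 ** (snr_db / 20))
    return [f + gain * b for f, b in zip(foreground, background)]

# Synthetic stand-ins: a decaying burst (the "gunshot") and a steady tone
# (the "movie background").
sample_rate = 8000
gunshot = [math.exp(-t / 200) * math.sin(2 * math.pi * 900 * t / sample_rate)
           for t in range(2000)]
movie_bed = [0.3 * math.sin(2 * math.pi * 220 * t / sample_rate)
             for t in range(2000)]

# One augmented clip per relative energy level (hypothetical SNR values in dB).
augmented = {snr: mix_at_snr(gunshot, movie_bed, snr) for snr in (0, 5, 10, 20)}
```

Sweeping the ratio produces several training clips from one recording, each with the event more or less buried in the background.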

Sometimes, there is really no way to compensate for the lack of data. Therefore, we recommend being intentional about the type of data you collect. The format in which data is stored is also important. For example, we sometimes encounter data stored in an aggregate form instead of the original raw data. Aggregated data suits reporting databases well, whereas data lakes generally store raw data; aggregation can lose the detail needed when we later want to do some analysis. We have run into a similar problem before. Our client kept information on their products’ descriptions (e.g., product price, items in stock, warehouse location, etc.).

Some of this information was also client-facing, so they took extreme due diligence to keep the database up to date and accurate. However, every time a change was made to the product information, the database was overwritten. When they wanted to look at historical data, they were not set up to do so. Fortunately, our client kept a log of when any change was made to entries in the database. We were able to use that log along with the current database to recreate historical data and started a process to set up a separate database for data science work without affecting the production database.
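One way to rebuild history from a change log and the current state is to start from the current records and undo every change made after the date of interest. The sketch below assumes that each log entry stores a timestamp, the product, the field, and the old value; the source does not describe the actual log schema, so these field names and the sample data are hypothetical.

```python
import copy

def state_as_of(current, change_log, as_of):
    """Reconstruct the product table as it looked at the end of `as_of`.

    Walks the log from newest to oldest and restores the old value of every
    change made after `as_of`. Timestamps are ISO date strings, so plain
    string comparison orders them correctly.
    """
    state = copy.deepcopy(current)  # never mutate the live data
    for change in sorted(change_log, key=lambda c: c["ts"], reverse=True):
        if change["ts"] <= as_of:
            break  # all remaining changes were already in effect
        state[change["product_id"]][change["field"]] = change["old"]
    return state

# Hypothetical current table and change log.
current = {"P1": {"price": 12.0, "stock": 40}}
change_log = [
    {"ts": "2022-01-10", "product_id": "P1", "field": "price", "old": 10.0, "new": 11.0},
    {"ts": "2022-02-01", "product_id": "P1", "field": "stock", "old": 55, "new": 40},
    {"ts": "2022-03-05", "product_id": "P1", "field": "price", "old": 11.0, "new": 12.0},
]

end_of_january = state_as_of(current, change_log, "2022-01-31")
```

Writing such snapshots to a separate store keeps the analysis workload away from the production database.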

In general, three different datasets are used for developing an ML solution: training data, validation data, and test data [9]. Validation data is used to tune the models. Although a model is not trained on the validation data, during the iterative process of tuning, information from this validation set is indirectly leaked into the model.

The test data should be kept separate and used only to determine the performance of the model, and even then only a few times. Some organizations test their models very rigorously. They involve domain experts and business leaders to create and curate the test data and keep it away from the data science team.

The transfer learning and augmentation methods are not used for test data. If the model is not satisfactory, the detailed test results are not shared with the data science team. Irrespective of whether model development is done in-house or outsourced, every organization should have well-curated test data and a way to evaluate the models. This cannot be done without the involvement of domain experts and business leaders. We have seen models that do not get deployed because business and domain experts are not convinced by the results and data provided by the ML team.
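The three-way split can be sketched with the standard library alone. This is a simplified illustration that uses a random split with hypothetical fractions; as discussed above, a test set curated by domain experts is preferable to a purely random one.

```python
import random

def split_dataset(items, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle once with a fixed seed, then cut into train, validation, and test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]                 # held back; used only for final evaluation
    val = shuffled[n_test:n_test + n_val]    # used repeatedly while tuning
    train = shuffled[n_test + n_val:]        # used to fit the model
    return train, val, test

# Placeholder record ids standing in for real examples.
records = list(range(100))
train, val, test = split_dataset(records)
print(len(train), len(val), len(test))
```

Any augmentation would then be applied to `train` only, so that the validation and test sets keep reflecting real data.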

- Two popular data management schemes are data warehouses and data lakes. A data warehouse follows the extract, transform, and load (ETL) structure, whereas a data lake follows the extract, load, and transform (ELT) structure.

- In a data warehouse, we know how data would be used, so we transform it before saving it. On the other hand, data lakes contain a variety of data that needs to be transformed based on the need.

- With data storage solutions becoming more affordable, many companies have started to adopt a data lake and the ELT structure. With ELT, the process of transforming data and storing it in a database creates the need for a new role that is not quite a Data Engineer and not quite a Data Analyst.

- These days, we hear the term Analytics Engineer to describe this role. By the way, this naming convention is by no means consistent across the industry. Their main task is to generate pipelines that would transform the data to maintain an easy-to-access data warehouse.

- Having the pipelines automated saves time for analysts who are often working on making dashboards for data reporting. Having one central data warehouse has the added benefit of making data reporting consistent across your enterprise and minimizing confusion.

- Data scientists can also benefit from having these formats in the same way that analysts do. They can have a consistent data source and concentrate on making statistical models or ML models. There is always going to be a need for custom transformations, but by having centralized transformation pipelines, this need may be reduced. We describe a generic architecture of the data lake in Appendix B.
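The difference between ETL and ELT described in the list above can be illustrated with a toy example. The sketch uses an in-memory SQLite database as a stand-in for both the warehouse and the lake; the table names and sales rows are hypothetical, and a real deployment would use dedicated storage and pipeline tooling.

```python
import sqlite3

# Raw events as they might arrive from a source system: everything is text.
raw_events = [
    ("2022-09-01", "P1", "3", "9.99"),
    ("2022-09-01", "P2", "1", "24.50"),
    ("2022-09-02", "P1", "2", "9.99"),
]

conn = sqlite3.connect(":memory:")

# ELT: load the raw rows untouched, then transform inside the store on demand.
conn.execute("CREATE TABLE raw_sales (day TEXT, product TEXT, qty TEXT, unit_price TEXT)")
conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?, ?)", raw_events)
conn.execute("""
    CREATE VIEW daily_revenue AS
    SELECT day, SUM(CAST(qty AS INTEGER) * CAST(unit_price AS REAL)) AS revenue
    FROM raw_sales
    GROUP BY day
""")
elt_result = conn.execute("SELECT day, revenue FROM daily_revenue ORDER BY day").fetchall()

# ETL: transform in application code first, then load only the finished table.
totals = {}
for day, _product, qty, unit_price in raw_events:
    totals[day] = totals.get(day, 0.0) + int(qty) * float(unit_price)
conn.execute("CREATE TABLE daily_revenue_etl (day TEXT PRIMARY KEY, revenue REAL)")
conn.executemany("INSERT INTO daily_revenue_etl VALUES (?, ?)", sorted(totals.items()))
etl_result = conn.execute("SELECT day, revenue FROM daily_revenue_etl ORDER BY day").fetchall()
```

Both routes yield the same report, but only the ELT route keeps the raw rows available for transformations that were not anticipated when the data was loaded.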

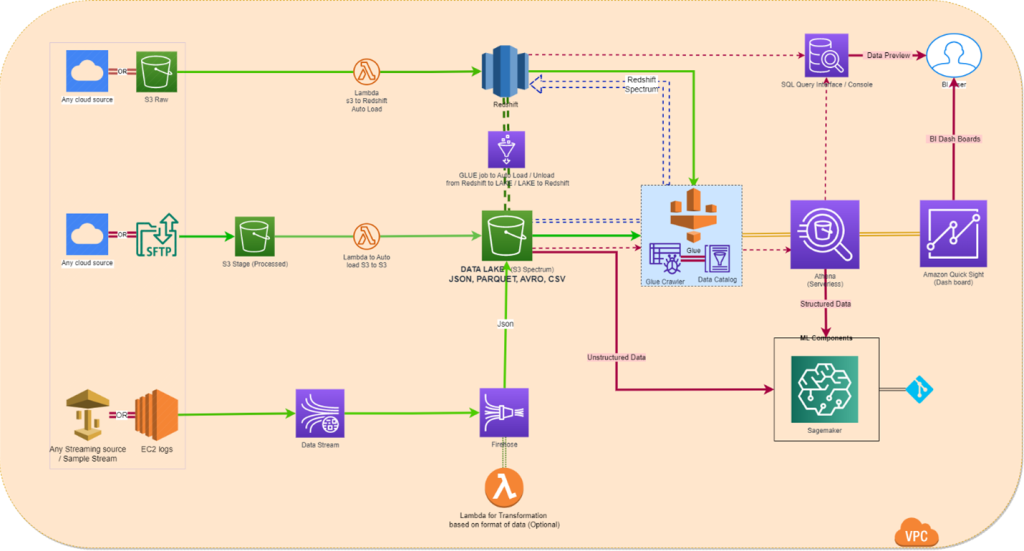

This data engineering part of the solution has been designed for customers who need simultaneous data access for the data science and business intelligence (BI) teams.

The data comes from multiple sources:

- Data ingestion from Redshift

- Data ingestion from S3

- Data ingestion from streaming sources.

As shown in the figure below, the solution uses several Amazon services:

- Amazon Redshift: a fully managed, petabyte-scale data warehouse service in the cloud.

- Amazon S3: an object storage service that stores data as objects within buckets.

- Amazon Kinesis Data Streams: a serverless streaming data service that makes it easy to capture, process, and store data streams at any scale, enabling real-time data ingestion.

- Amazon Kinesis Data Firehose: an extract, transform, and load (ETL) service that reliably captures, transforms, and delivers streaming data to data lakes, data stores, and analytics services.

- AWS Lambda: a compute service that lets you build applications that respond quickly to new information and events.

- Amazon SageMaker: a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy ML models quickly.

The diagram depicts the solution architecture, which enables real-time data ingestion from external sources or any cloud platform and real-time data storage on a data lake. This functionality is specifically tailored for situations where large amounts of real-time data need to be stored and organized on a data lake and accessed directly from the lake without first being loaded into a warehouse.

In this module, data is ingested from either any cloud or external source. The workflow is as follows:

- The data source for Redshift is assumed to be any cloud data source. The processed or transformed data in the Redshift cluster can be accessed directly with SQL-supported tools.

- The data source for S3 is likewise assumed to be any cloud or external source. The incoming data is treated as structured or unstructured. Structured data can optionally be loaded into an Amazon Redshift cluster, or it can be accessed directly without being loaded, using Redshift Spectrum, a feature of Amazon Redshift that allows you to query data stored on Amazon S3 directly and supports nested data types.

- Streaming data arrives from an external source in a Kinesis data stream, which is a massively scalable and durable real-time data streaming service. A Lambda function reads the streaming data, performs tasks such as encryption and compression based on the architecture needs, and feeds the result to Firehose.

- The streaming data is then automatically consumed by Amazon Kinesis Data Firehose. Kinesis Data Firehose loads streaming data into data lakes, data stores, and analytics services. It is a fully managed service that automatically scales to match the throughput of your data and requires no ongoing administration. Data captured by this service can be transformed and stored in an S3 bucket as an intermediate step.

- The streamed data in the S3 bucket can optionally be loaded into an Amazon Redshift cluster using the COPY command and stored in a database, or it can be accessed directly through Redshift Spectrum without being loaded into the Redshift cluster.

- Data from all three channels can be accessed directly through Redshift or Redshift Spectrum by BI users. The same data can be unloaded to the S3 data lake from the Redshift cluster and the intermediate buckets; the lake is the final consumption point of data for ML models.
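The Lambda step in the streaming path above can be sketched as follows. This is an illustrative outline, not the deployed function: it assumes the standard event shape Lambda receives from a Kinesis data stream (base64-encoded payloads under `Records[].kinesis.data`), uses gzip as the example compression, and takes the Firehose client and the delivery stream name as parameters. The stream name `demo-stream` and the helper names are hypothetical.

```python
import base64
import gzip

def build_firehose_records(event):
    """Decode each Kinesis record in the Lambda event and gzip its payload."""
    records = []
    for record in event["Records"]:
        payload = base64.b64decode(record["kinesis"]["data"])
        records.append({"Data": gzip.compress(payload)})
    return records

def make_handler(firehose_client, delivery_stream_name):
    """Build a Lambda handler that forwards compressed records to Firehose."""
    def handler(event, context):
        records = build_firehose_records(event)
        failed = 0
        # PutRecordBatch accepts at most 500 records per call.
        for start in range(0, len(records), 500):
            response = firehose_client.put_record_batch(
                DeliveryStreamName=delivery_stream_name,
                Records=records[start:start + 500],
            )
            failed += response.get("FailedPutCount", 0)
        return {"forwarded": len(records), "failed": failed}
    return handler
```

In a deployed function, the handler would typically be created once at module load with something like `make_handler(boto3.client("firehose"), "demo-stream")`. Passing the client in as a parameter also makes the logic easy to test with a stand-in object.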

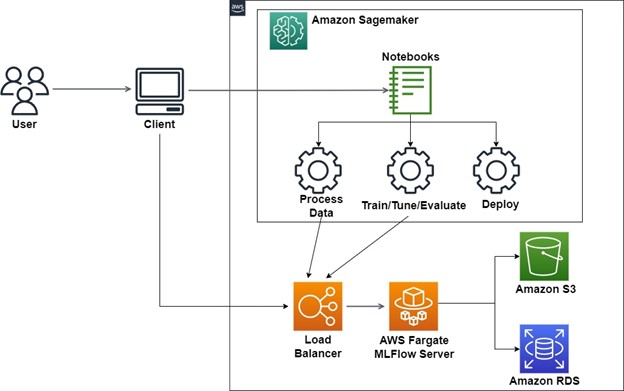

In the earlier section, we discussed different architectures for ingesting data from cloud or external data sources into the S3 data lake bucket and creating a data pipeline. This data lake becomes the final consumption point of data for ML models. During the different phases of an ML project, data scientists need to track multiple experiments and manage the model versions going to production, along with their lifecycle, to find a solution to the business need. The open-source platform MLflow helps manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. It includes the following components:

- Tracking – Record and query experiments: code, data, configuration, and results

- Projects – Package data science code in a format to reproduce runs on any platform

- Models – Deploy ML models in diverse serving environments

- Registry – Store, annotate, discover, and manage models in a central repository

MLflow can be deployed on AWS Fargate and used in the ML project along with Amazon SageMaker. SageMaker is used to develop, train, tune, and deploy an ML model quickly, removing the heavy lifting from each step of the ML process.

In the suggested architecture described in Figure 2, a central remote MLflow tracking server is used to manage experiments and models collaboratively. This MLflow server is dockerized and hosted on AWS Fargate. Amazon S3 and Amazon RDS for MySQL are set as the artifact and backend stores, respectively. The artifact store is suitable for large data (such as an S3 bucket or a shared NFS file system) and is where the data science team logs its artifact output (for example, models). The backend store is where the MLflow tracking server stores experiment and run metadata, as well as parameters, metrics, and tags for runs.

We can use databases such as MySQL, SQLite, and PostgreSQL as a backend store with MLflow. For this example, we set up an RDS for MySQL instance. Amazon Aurora, a MySQL- and PostgreSQL-compatible relational database, can also be used for this purpose. After the MLflow server is launched on Fargate, the remote MLflow tracking server is running and accessible through a REST API via the load balancer URI. The MLflow Tracking API can be used to log parameters, metrics, and models when running the ML project with SageMaker.
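Logging to the remote tracking server can be sketched with the MLflow Tracking API as follows. This is a minimal outline under stated assumptions: the tracking URI, experiment name, parameter names, and metric history are placeholders, and the `mlflow` package is assumed to be installed in the training environment (it is imported inside the function so that the small helper can be used without it).

```python
def final_metrics(history):
    """Return the last recorded value of each metric in a training history."""
    return {name: values[-1] for name, values in history.items() if values}

def log_run(tracking_uri, experiment_name, params, history):
    """Log parameters and per-epoch metrics to an MLflow tracking server."""
    import mlflow  # assumed to be installed where training runs

    mlflow.set_tracking_uri(tracking_uri)   # e.g. the load balancer URI
    mlflow.set_experiment(experiment_name)
    with mlflow.start_run():
        mlflow.log_params(params)
        for name, values in history.items():
            for step, value in enumerate(values):
                mlflow.log_metric(name, value, step=step)
        for name, value in final_metrics(history).items():
            mlflow.log_metric(f"final_{name}", value)

# Hypothetical hyperparameters and metric history for illustration.
params = {"learning_rate": 0.01, "epochs": 3}
history = {"val_loss": [0.92, 0.71, 0.64], "val_accuracy": [0.61, 0.74, 0.79]}
summary = final_metrics(history)
```

A training script would then call `log_run` with the URI of the tracking server, and every run would appear under the named experiment for the whole team to compare.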

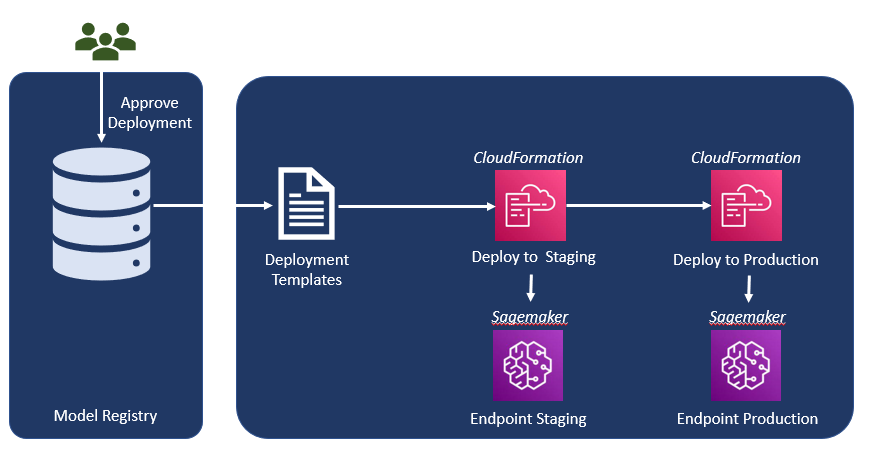

Models trained in SageMaker are registered in the MLflow Model Registry, as shown in Figure 3. As a result, the pipeline registers a new model version in MLflow at each execution. Finally, the MLflow model is deployed to a SageMaker endpoint. Here, the SageMaker MLOps project and the MLflow Model Registry were used together to automate an end-to-end ML lifecycle.

1. M. Chui et al., “The State of AI in 2021,” McKinsey Global Survey, Dec. 2021. [Online]. Available: https://www.mckinsey.com/business-functions/quantumblack/our-insights/global-survey-the-state-of-ai-in-2021

2. Loren Lugosch, Mirco Ravanelli, Patrick Ignoto, Vikrant Singh Tomar, and Yoshua Bengio, “Speech Model Pre-training for End-to-End Spoken Language Understanding,” Interspeech 2019.

3. Francois Chollet, Deep Learning with Python, Manning Publications, 2017.

4. Aurélien Géron, Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, 2nd ed., O’Reilly, 2019.

5. Connor Shorten and Taghi Khoshgoftaar, “A survey on Image Data Augmentation for Deep Learning,” Journal of Big Data, 60, 2019.

6. Daniel S. Park et al., “SpecAugment: A Simple Data Augmentation Method for Automatic Speech Recognition,” Proc. Interspeech, pp. 2613-2617, 2019.

7. M. Plakal and D. Ellis, “Yamnet,” September 2022. [Online]. Available: https://github.com/tensorflow/models/tree/master/research/audioset/yamnet

8. J. F. Gemmeke et al., “Audio Set: An ontology and human-labeled dataset for audio events,” 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 776-780, 2017.

9. Andrew Ng, Machine Learning Yearning: Technical Strategy for AI Engineers, In the Era of Deep Learning, September 2022. [Online]. Available: https://github.com/ajaymache/machine-learning-yearning

10. Javier Andreu-Perez et al., “Big data for health,” IEEE Journal of Biomedical and Health Informatics, 19(4), 2015.
