Dancing with Algorithms: Why Human Touch Remains the Secret Sauce in AI-Powered Requirements Engineering

Exploring the balance of AI automation and human expertise across the full requirements lifecycle.

Executive Summary

Requirements are the foundation of every successful software product—yet they are also one of the most fragile points in the delivery lifecycle. Misunderstood intent, vague language, and missing context lead to wasted effort, missed market windows, and frustrated stakeholders.

AI and Large Language Models (LLMs) promise a step change in how we handle requirements: summarizing workshops, drafting specifications, analyzing ambiguity, and generating acceptance criteria at a speed no human can match. But there is a catch. AI brings incredible processing power without experience, empathy, or accountability.

This whitepaper explores how Product Managers, Delivery Leaders, and CTOs can safely harness AI-powered requirements engineering—protecting quality while unlocking speed, consistency, and better alignment with business goals.


1. The Hard Truth About Software Requirements

Why Requirements Still Make or Break Products

Let’s face it – requirements are the foundation that can make or break your product. Yet, projects still crash and burn because someone misunderstood what the client actually needed. Requirements engineering has remained stubbornly human-centric for decades, and for good reason. It’s messy, ambiguous, and deeply political.

Requirements professionals must navigate stakeholder expectations, technical constraints, regulatory obligations, and market realities—often with incomplete or conflicting information. Phrases like “the system should be intuitive” hide a world of assumptions that only careful questioning and deep empathy can uncover.

The Business Impact of Getting It Wrong

For Product Managers, a poorly captured requirement can mean missed market opportunities. For Delivery Leaders and CTOs, it translates to unpredictable timelines, rework, and budget overruns. Entire teams can end up building elegant functionality that doesn’t actually solve the right problem.

Against this backdrop, the rise of AI and LLMs feels both exciting and unsettling. Can machines really help with something as nuanced and human as requirements?


2. When AI Meets Requirements: The Perfect Storm of Opportunity and Risk

Where AI Naturally Fits in Requirements Engineering

AI tools are fundamentally information processors—and requirements engineering is largely about processing and structuring information. Consider a typical day for a Business Analyst or Product Owner:

Drowning in transcripts of stakeholder interviews

Sifting through endless user feedback and survey results

Drafting repetitive user stories, use cases, and specifications

Checking requirements for clarity, consistency, and ambiguity

Maintaining traceability across documents and artifacts

AI can assist across all of these tasks: summarizing meetings, extracting key points, analyzing language for ambiguity, drafting acceptance criteria, and suggesting traceability links. For leaders, this looks like a holy grail—reduced documentation time, less manual drudgery, and more consistent artifacts.

“AI in requirements is like giving a Formula 1 car to someone who just got their learner’s permit. The potential power is incredible, but without proper guidance, you’re headed straight for the wall.”

The Limits of Pure Automation

Yet, AI lacks the ingredients that make experienced analysts effective: deep domain knowledge, stakeholder empathy, strategic understanding, and nuanced judgment. In practice, it behaves like an incredibly fast junior team member that needs constant supervision.

This is where two pillars become non-negotiable for safe AI use in requirements: human oversight and prompt engineering. Ignore either and you are effectively building your product on digital quicksand.


3. Why Your AI Needs Adult Supervision

The Hallucination Horror Show

AI systems can confidently generate content that sounds legitimate but is entirely fabricated. Imagine you provide an AI with a transcript of customer feedback for your B2B platform:

Your prompt: “Extract specific feature requests from this transcript.”

Transcript: “Users in finance need to export reports in CSV and Excel formats, with the ability to schedule these exports.”

AI response:

Export reports in CSV and Excel

Scheduled report delivery

Integration with QuickBooks

That last item was never mentioned. Without human validation, hallucinated “requirements” can slip into the backlog, diverting teams into building features nobody actually asked for.
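A reviewer can get a rough first pass at this check with a small script. The sketch below is a minimal illustration, not a real hallucination detector: the function names, the stopword list, the five-character prefix matching, and the 0.5 threshold are all assumptions chosen for this example. It flags extracted items whose wording has little overlap with the source transcript so that a human looks at those first.

```python
import re

# Assumed stopword list; extend it for real transcripts.
STOPWORDS = {"the", "a", "an", "in", "and", "or", "of", "to", "with", "for", "these", "this"}

def stems(text: str) -> set[str]:
    """Lowercase the text, drop stopwords, and keep a crude 5-character prefix of each word."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w[:5] for w in words if w not in STOPWORDS}

def flag_ungrounded(items: list[str], source: str, min_overlap: float = 0.5) -> list[str]:
    """Return the items whose word prefixes mostly do not appear in the source."""
    source_stems = stems(source)
    flagged = []
    for item in items:
        item_stems = stems(item)
        if not item_stems:
            continue  # nothing to compare
        overlap = len(item_stems & source_stems) / len(item_stems)
        if overlap < min_overlap:
            flagged.append(item)
    return flagged

transcript = (
    "Users in finance need to export reports in CSV and Excel formats, "
    "with the ability to schedule these exports."
)
ai_items = [
    "Export reports in CSV and Excel",
    "Scheduled report delivery",
    "Integration with QuickBooks",
]
print(flag_ungrounded(ai_items, transcript))  # ['Integration with QuickBooks']
```

A lexical check like this only catches items that introduce new vocabulary. A fabricated requirement that reuses words from the transcript would pass, so the script narrows the reviewer's attention rather than replacing the review.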

The Context Catastrophe

AI doesn’t inherently understand your business strategy, target segment, or regulatory environment. When you ask for “best-practice onboarding requirements,” it may respond with generic steps that ignore critical facts—like your users being non-technical seniors, or your need to meet strict KYC/AML regulations.

The Bias Blind Spot & Empathy Vacuum

AI models learn from large datasets that reflect societal bias. Unchecked, they can generate requirements or design directions that exclude or disadvantage certain user groups. And while AI can analyze text, it doesn’t feel user frustration, anxiety, or delight. Requirements work often hinges on recognizing these emotional drivers.

The Irreplaceable Human Role

In this environment, human professionals are not optional—they’re essential. They are:

Validators: Comparing AI output against stakeholder intent and source material.

Contextualizers: Applying domain and business strategy to refine requirements.

Ethical Guardians: Identifying bias and ensuring inclusive design.

Nuance Decoders: Interpreting the “unsaid” in user feedback and stakeholder comments.

Decision Makers: Making judgment calls based on incomplete or conflicting information.


4. Structuring Oversight Across the Requirements Lifecycle

What Needs Oversight

Effective oversight isn’t a quick skim of AI output—it’s a structured review process embedded in every phase of the requirements lifecycle. Key dimensions include:

Factual accuracy: Does the AI output correctly reflect the source?

Completeness: Has anything important been omitted or oversimplified?

Consistency: Does it align with existing requirements and constraints?

Clarity & testability: Is the language precise enough for developers and testers?

Strategic alignment: Does it support product and business objectives?

Ethical implications: Does it introduce or reflect harmful bias?
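One lightweight way to make these dimensions enforceable is to track a named sign-off for each before an AI-drafted artifact is accepted. The sketch below assumes a simple dictionary of dimension to reviewer; that structure and the function name are hypothetical, not part of any particular tool.

```python
# The six review dimensions listed above; the sign-off dictionary shape is an assumption.
REVIEW_DIMENSIONS = (
    "factual accuracy",
    "completeness",
    "consistency",
    "clarity and testability",
    "strategic alignment",
    "ethical implications",
)

def outstanding_reviews(signoffs: dict[str, str]) -> list[str]:
    """Return the dimensions that no named reviewer has signed off yet."""
    return [d for d in REVIEW_DIMENSIONS if not signoffs.get(d, "").strip()]

signoffs = {
    "factual accuracy": "BA",
    "completeness": "BA",
    "clarity and testability": "QA engineer",
}
print(outstanding_reviews(signoffs))
# ['consistency', 'strategic alignment', 'ethical implications']
```

An artifact would move forward only when the returned list is empty, which keeps the review from collapsing into a quick skim.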

When Oversight Must Occur

Oversight should be continuous, not an afterthought:

During elicitation: Reviewing AI-generated summaries of workshops and interviews.

During analysis: Validating AI-suggested refinements or categorizations.

During documentation: Reviewing AI-drafted requirements and user stories.

During validation: Examining AI-generated acceptance criteria.

During traceability: Verifying AI-suggested links between artifacts.

Who Should Perform Oversight

Oversight works best when distributed across roles:

Requirements professionals: BAs, Product Owners, and Analysts validate intent and completeness.

Subject Matter Experts: Confirm domain-specific accuracy.

QA Engineers: Check for testability and non-ambiguous behavior.

Development Leads: Validate technical feasibility.

Product Management: Ensure strategic alignment and prioritization.

High-performing teams treat AI output as an intelligent first draft that always passes through expert hands before it becomes part of the product.


5. The Art of Prompt Engineering: The 4C Framework

Speaking AI’s Language

If human oversight is the safety net, prompt engineering is the steering wheel. The quality of your prompt directly shapes the usefulness of the response. For requirements professionals, this is a core skill—not a nice-to-have.

The 4C Framework: Clarity, Context, Constraints, and Critical Instruction.

1. Clarity: Be Ultra-Specific

State exactly what you want and what it applies to.

Poor prompt: “Write requirements for login.”

Better prompt: “Generate functional requirements for the user login feature of a mobile banking application, covering successful login, incorrect password, and account lockout scenarios.”

2. Context: Provide Necessary Background

Give the AI the information it needs to understand the scope.

Example: “Using only the following transcript from the user interview dated 2025-10-26, extract all statements indicating user needs or pain points related to data entry.”

3. Constraints: Define Format and Boundaries

Example: “Present the extracted needs as a Markdown bulleted list. Each item should start with ‘User needs…’ or ‘User struggles with…’. Do not include implementation details or potential solutions.”

4. Critical Instruction: Guide the AI’s Logic

Example: “Analyze this user story and generate acceptance criteria in Gherkin format. Ensure each criterion describes a single, verifiable outcome. Identify any ambiguity in the original story that made generating criteria difficult.”
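Teams that reuse prompts may find it helpful to capture the four parts as a small template so that none is silently skipped. The following is a minimal sketch under that assumption; the class name `FourCPrompt`, the section labels, and the sample critical instruction are illustrative and do not come from any specific tool.

```python
from dataclasses import dataclass

@dataclass
class FourCPrompt:
    clarity: str               # the exact task and what it applies to
    context: str               # background or source material the model may use
    constraints: str           # output format and boundaries
    critical_instruction: str  # how the model should reason or check itself

    def render(self) -> str:
        """Join the four parts into one prompt, labelling each section."""
        sections = [
            ("Task", self.clarity),
            ("Context", self.context),
            ("Constraints", self.constraints),
            ("Critical instruction", self.critical_instruction),
        ]
        # Refuse to build a prompt with an empty section.
        missing = [label for label, text in sections if not text.strip()]
        if missing:
            raise ValueError(f"Missing 4C sections: {', '.join(missing)}")
        return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)

prompt = FourCPrompt(
    clarity="Extract all statements indicating user needs or pain points related to data entry.",
    context="Use only the following transcript from the user interview dated 2025-10-26:\n<transcript text here>",
    constraints="Present the needs as a Markdown bulleted list. Do not include implementation details.",
    critical_instruction="Flag any statement you were unsure how to classify instead of guessing.",
)
print(prompt.render())
```

Raising an error on an empty section is a deliberate choice here: a prompt missing its context or constraints still produces output, which is exactly why the omission is easy to overlook.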

From Vague Requirement to Concrete Specification

Consider the vague requirement: “The system must ensure data integrity.”

Your prompt: “Analyze the following requirement statement for ambiguity and suggest specific, measurable ways to improve its clarity, considering different types of data integrity (e.g., data accuracy, consistency, relationships). Requirement: ‘The system must ensure data integrity.’”

The AI might respond by identifying the missing details and suggesting a more specific version focused on referential integrity. A human BA then applies domain knowledge, adding constraints around duplicates and mandatory fields to form a final, complete requirement.

The result: AI accelerates the path from vague intent to clear, testable specification—but humans close the gap between “plausible” and “correct.”
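Before a requirement even reaches the AI, a simple lint can surface wording that is likely to need this kind of refinement. The sketch below assumes a small, hand-picked list of vague terms; the list and the function name are hypothetical and would need tuning for each domain.

```python
import re

# Assumed, non-exhaustive list of terms that often signal an untestable requirement.
VAGUE_TERMS = ["intuitive", "user-friendly", "fast", "ensure", "integrity", "robust"]

def find_vague_terms(requirement: str) -> list[str]:
    """Return the vague terms found in the requirement, in the order of VAGUE_TERMS."""
    lowered = requirement.lower()
    return [t for t in VAGUE_TERMS if re.search(rf"\b{re.escape(t)}\b", lowered)]

print(find_vague_terms("The system must ensure data integrity."))  # ['ensure', 'integrity']
print(find_vague_terms("The system should be intuitive."))         # ['intuitive']
```

A hit does not mean the requirement is wrong, only that someone should ask what the term means in measurable terms.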


6. The Human–AI Dance: How It Works in Practice

A Collaborative Loop, Not a One-Off Interaction

Implementing AI in requirements isn’t about pushing tasks over a wall. It’s a continuous loop:

Human sets the objective (prompt).

AI generates a draft or analysis.

Human refines, corrects, and contextualizes.

Repeat as needed until the artifact is fit for purpose.
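The loop above can be sketched in a few lines. This is an illustration under stated assumptions: `generate` stands in for whatever model call a team uses, `review` stands in for the human reviewer, and both stubs below are hypothetical placeholders rather than real integrations.

```python
from typing import Callable, Optional

def human_ai_loop(
    objective: str,
    generate: Callable[[str], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Alternate AI drafting and human review until the reviewer accepts.

    `review` returns None to accept the draft, or feedback text to request another round.
    """
    prompt = objective
    for _ in range(max_rounds):
        draft = generate(prompt)
        feedback = review(draft)
        if feedback is None:
            return draft
        # Fold the draft and the reviewer's feedback into the next prompt.
        prompt = f"{objective}\n\nPrevious draft:\n{draft}\n\nReviewer feedback:\n{feedback}"
    raise RuntimeError("No draft accepted; hand the artifact to a human author.")

# Stubs so the sketch runs without a model or a person in the loop.
def fake_model(prompt: str) -> str:
    return "Draft v2" if "Reviewer feedback" in prompt else "Draft v1"

def fake_reviewer(draft: str) -> Optional[str]:
    return None if draft == "Draft v2" else "Scheduling frequency is missing."

print(human_ai_loop("Summarize key decisions.", fake_model, fake_reviewer))  # Draft v2
```

The round limit matters: if the reviewer keeps rejecting drafts, the loop stops and hands the work back to a person instead of iterating indefinitely.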

Practical Examples Across the Lifecycle

Elicitation: After a workshop, you feed the transcript to AI with the prompt, “Summarize key decisions and list specific requirements mentioned, noting areas of disagreement.” The human then validates this against their notes and identifies follow-up questions.

Analysis: You ask AI, “Analyze this requirement for clarity, completeness, consistency, and testability. Suggest alternative phrasing if needed.” The analyst reviews suggestions and decides which align with system behavior and user flows.

Documentation: You provide validated requirements and templates, asking AI to “Draft user stories in this format based on these requirements, ensuring each is concise and focuses on user value.” A human ensures nuance is captured and adds missing acceptance criteria.

Validation: AI generates Gherkin scenarios from a user story. QA engineers then validate them, add edge cases, and refine the scenarios for full coverage.

This workflow demands new skills: prompt engineering, AI evaluation, and updated methodologies that explicitly incorporate AI as a first-class participant in the RE process.


7. Choosing Your AI Partner & The New Requirements Professional

Not All Models Are Created Equal

Selecting the right AI solution for requirements engineering is a strategic choice. Key factors include:

Domain specificity: Is it tuned for software engineering or your industry?

Data security: Where is data processed and stored? Is it reused for training?

Integration: Can it connect to Jira, Confluence, Azure DevOps, or your ALM tools?

Explainability: Can it indicate why it produced certain outputs?

Capability set: Does it support summarization, generation, analysis, and traceability?

Cost & scalability: Will it scale with team usage and enterprise constraints?

Pilot programs, measured against specific KPIs, help validate whether a model genuinely improves RE outcomes, not just speed.

The New Requirements Professional: From Documenter to Orchestrator

AI doesn’t eliminate the role of requirements professionals; it elevates it. Their work shifts from manual transcription and formatting to orchestrating human–AI collaboration, which centers on:

Deep stakeholder engagement to uncover unstated needs and constraints.

Strategic analysis linking requirements to business goals and metrics.

Designing AI workflows: where AI is used, how, and with what safeguards.

Advanced prompt engineering to consistently get high-quality outputs.

Critical validation as quality gatekeepers and ethical stewards.

For leadership, this implies investment in training and career development so that BAs, Product Owners, and Analysts can thrive as AI-augmented professionals.


8. Case Study: AI’s Promise vs. Reality

AI-Assisted Requirements for an E-Commerce Recommendation Engine

A large e-commerce company needed to add a complex “personalized recommendation engine” feature. The requirements work involved reviewing thousands of lines of user feedback, market analysis, and internal strategy documents.

Challenge: Manually sifting through this volume of information to extract specific requirements was slow and error-prone.

AI Application: The team used an AI tool to process the documents and extract needs and pain points related to product discovery and recommendations.

Prompt example: “Analyze these user feedback documents and extract specific needs and pain points related to product discovery and recommendations. Categorize by theme and report instances where users mentioned competitor features.”

Outcome: Speed with Caveats

AI produced structured lists of feedback categorized by theme, including competitor references. However, human oversight revealed:

Misinterpretations of slang and sarcasm as literal requirements.

Confusion between user observations and actual needs.

Ambiguous categorizations that required domain insight to correct.

The team reduced initial feedback synthesis time by roughly 40%, but human review and refinement still accounted for about 60% of the total effort—critical to ensuring that requirements truly reflected user needs and business priorities.

9. Looking Ahead: The Future of Requirements Engineering

What Will Change—and What Won’t

AI capabilities will continue to improve: better context handling, deeper domain adaptation, tighter integration with RE and ALM tools, and more robust analytical capabilities. More of the “mechanical” work of requirements engineering will be handled by machines.

But core human strengths—judgment, empathy, strategic thinking, and interpersonal negotiation—remain irreplaceable. Requirements are ultimately about what users truly need, not just what they say. That gap can’t be bridged by probability alone.

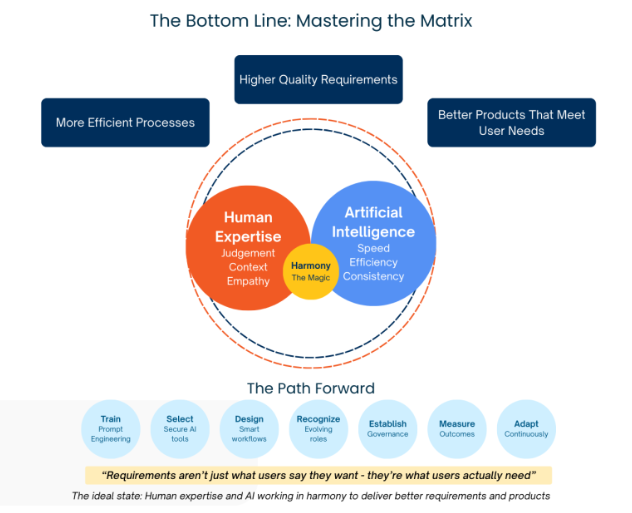

The Bottom Line: Mastering the Matrix

AI offers tremendous potential in requirements engineering: faster cycles, richer analysis, and more consistent documentation. For Product Managers, CTOs, and Delivery Leaders, the prize is clear—better requirements, fewer surprises, and products that align more closely with real-world needs.

Core Principle: The real magic happens when human expertise and artificial intelligence work in harmony—technology serving human goals, not the other way around.

The Path Forward

Train RE professionals in prompt engineering and AI validation.

Select secure, explainable AI tools that integrate with existing systems.

Design workflows that embed human oversight at critical decision points.

Recognize and support the evolving role of requirements professionals.

Establish clear governance around AI usage and ethics.

Because at the end of the day, requirements aren’t just about what users say they want—they’re about what they actually need. And that distinction will always require something uniquely human: judgment.

Ready to Explore AI-Powered Requirements Engineering?

V2Solutions helps product and technology teams design human-centered, AI-augmented requirements workflows—combining automation with deep domain expertise to improve speed, quality, and business alignment.


Author’s Profile

Sagar Thorat

Principal Architect, V2Solutions

Sagar leads enterprise architecture and digital platform initiatives at V2Solutions, helping organizations modernize legacy estates, adopt composable and cloud-native patterns, and accelerate delivery of high-impact digital products.