Improving Requirement Quality and Consistency Through AI-Based Validation

Executive Summary

Software project failures often trace back to poor-quality requirements—ambiguous, incomplete, or inconsistent directives that undermine the development process. As digital transformation accelerates, organizations must address this quality crisis in requirements engineering to reduce costs, enhance agility, and improve stakeholder satisfaction.

A new class of tools is changing how requirements are validated and managed, and it targets these challenges directly.

AI-based validation has emerged as a viable and scalable solution. Leveraging natural language processing (NLP) and machine learning (ML), it provides automated, objective, and real-time quality and consistency checks across requirement documents. By integrating seamlessly into existing workflows, AI-powered tools improve requirement maturity and reduce costly rework.

Key Benefits:

- Early detection of requirement defects

- Enhanced collaboration through standardized language

- Faster project delivery via clearer, testable requirements

Strategic Recommendations:

- Launch pilot projects to evaluate AI validation tools

- Embed AI validation into requirement authoring platforms

- Promote a culture of quality through training and governance

Defining Requirements Quality and Consistency

Before we examine how AI enables improvement, it’s important to understand what defines quality and consistency in requirements.

High-quality requirements enable teams to understand what is needed, validate it effectively, and deliver aligned outcomes. Quality and consistency are multi-dimensional constructs in the context of requirements.

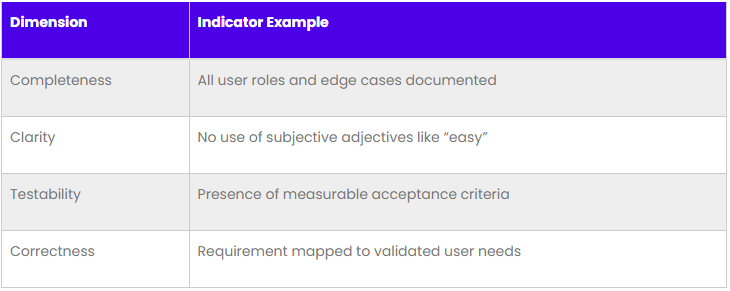

Quality Dimensions Framework:

- Completeness: Captures all stakeholder expectations, dependencies, and boundary conditions.

- Clarity: Removes ambiguity through precise language and standardized expressions.

- Testability: Enables requirements to be validated via acceptance criteria or test cases.

- Correctness: Aligns with true stakeholder needs and avoids misinterpretation.

Understanding quality alone isn’t sufficient—organizations must also tackle the often-overlooked problem of consistency.

Consistency Challenges:

- Internal Consistency: Contradictory requirements or conflicting conditions within the same document.

- Cross-functional Consistency: Divergence between the interpretations or priorities of different teams (e.g., business vs. development).

- Temporal Consistency: Requirements drifting in meaning or intent across iterations.

- Terminology Standardization: Lack of agreed-upon vocabulary results in misunderstandings.

Without an accurate assessment of the current state, it’s difficult to measure progress or justify transformation initiatives.

Current State Assessment:

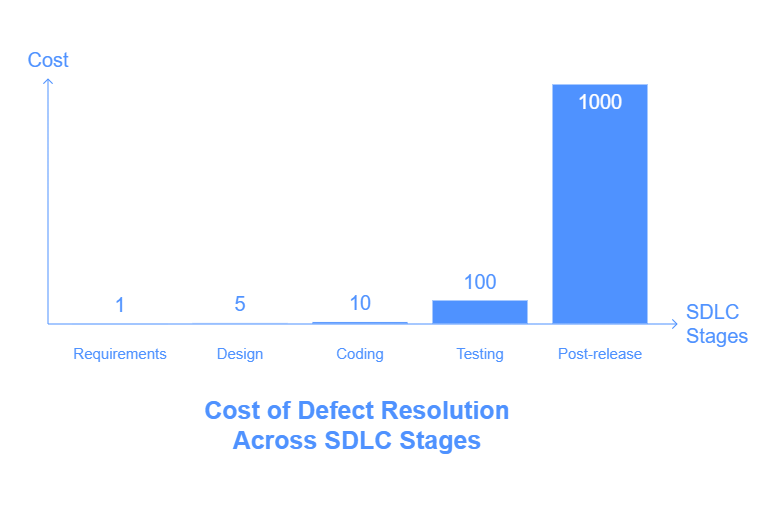

- The IEEE reports that up to 50% of defects in software projects originate from poor requirements.

- According to Capers Jones, a defect that costs $1 to fix at the requirements stage can cost $1,000 to fix after release.

- Industry surveys reveal that only 48% of organizations have a formal quality review process for requirements.

Traditional Quality Assurance Limitations

Having outlined the foundational concepts, let’s examine the limitations of traditional approaches to requirements quality assurance.

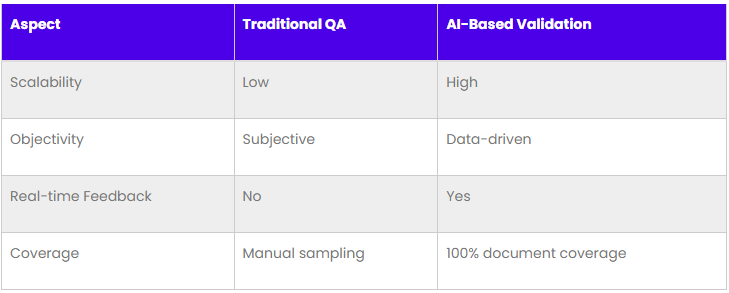

Despite formal reviews and walkthroughs, traditional QA techniques for requirements suffer from human limitations.

Manual Review Bottlenecks:

- Subjective interpretation creates inconsistent feedback.

- Reviewer fatigue leads to missed issues in lengthy documents.

- Time-consuming reviews hinder agility in iterative cycles.

These limitations become even more pronounced when consistency is factored into the equation.

Consistency Checking Challenges:

- Manual cross-referencing across hundreds of requirements is error-prone.

- Terminology drift emerges due to lack of oversight.

- Multi-team coordination introduces conflicting dependencies.

Additionally, organizations struggle to quantify the quality of requirements effectively.

Quality Measurement Gaps:

- No unified metrics to assess requirement quality.

- Ad-hoc or checklist-based validation is insufficient.

- Traceability of requirement evolution and quality impact is lacking.

Table 2: Traditional QA vs. AI-Based Validation

AI-Based Validation: Core Capabilities

To address these limitations, AI-based tools provide advanced capabilities to analyze and validate requirements documents at scale and with high accuracy.

These tools combine natural language processing and machine learning to offer a comprehensive view of requirement quality and consistency.

Natural Language Processing for Quality Assessment

- Ambiguity Detection and Scoring: AI models flag vague phrases and rate them on an ambiguity scale.

- Completeness Gap Identification: NLP identifies missing roles, conditions, or linked requirements.

- Clarity Enhancement Recommendations: Suggestions are provided for simplifying or rephrasing convoluted statements.
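To make ambiguity scoring concrete, here is a minimal sketch that uses a fixed lexicon of vague terms. The `VAGUE_TERMS` list and the three-hit cap are assumptions for illustration only; a production tool would more likely rely on a trained model or a much larger, domain-tuned vocabulary.

```python
import re

# Hypothetical lexicon of vague terms (assumption, not from any specific tool).
VAGUE_TERMS = ["user-friendly", "as needed", "fast", "appropriate", "etc", "easy"]


def ambiguity_score(requirement: str) -> tuple[float, list[str]]:
    """Return (score between 0 and 1, vague terms found) for one requirement."""
    text = requirement.lower()
    hits = [
        term for term in VAGUE_TERMS
        if re.search(rf"\b{re.escape(term)}\b", text)
    ]
    # Assumed scale: three or more distinct vague terms gives the maximum score.
    score = min(1.0, len(hits) / 3)
    return score, hits


if __name__ == "__main__":
    score, hits = ambiguity_score("The system shall be user-friendly and respond fast.")
    print(f"ambiguity={score:.2f}, flagged={hits}")
```

A lexicon keeps the result explainable, since every flag points at a specific phrase, but it misses ambiguity that depends on context.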

Consistency Validation Mechanisms

- Semantic Similarity Analysis: Ensures that similar requirements express aligned meaning.

- Terminology Standardization: Flags inconsistent or conflicting terminology.

- Cross-reference Validation: Maps dependencies and ensures alignment across linked requirements.
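Semantic similarity analysis can be approximated with a simple sketch. The version below uses bag-of-words cosine similarity as a stand-in for the embedding-based comparison an NLP tool would typically perform; the function names and the 0.6 threshold are illustrative assumptions.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Turn text into a word-count vector (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    va, vb = vectorize(a), vectorize(b)
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


def similar_pairs(reqs: dict[str, str], threshold: float = 0.6):
    """Return (id, id, score) for requirement pairs at or above the threshold."""
    ids = sorted(reqs)
    pairs = []
    for i, left in enumerate(ids):
        for right in ids[i + 1:]:
            score = cosine_similarity(reqs[left], reqs[right])
            if score >= threshold:
                pairs.append((left, right, round(score, 2)))
    return pairs
```

Pairs that score high are candidates for review: they may be duplicates, or near-duplicates that disagree on a detail such as a threshold value.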

Automated Quality Scoring:

- Multi-dimensional Quality Metrics: AI generates individual scores for completeness, clarity, testability, and correctness.

- Requirement Maturity Assessment: Tracks improvements or regressions in quality over time.

- Predictive Quality Indicators: Highlights which requirements are most likely to introduce future defects.
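As one possible reading of requirement maturity assessment, the sketch below flags requirements whose quality score dropped between their last two versions. The score history structure and the 0.05 tolerance are assumptions chosen for illustration.

```python
def find_regressions(
    history: dict[str, list[float]], tolerance: float = 0.05
) -> dict[str, float]:
    """Map requirement ID to the score drop between its two latest versions.

    `history` holds one list of quality scores per requirement, oldest first.
    Only drops larger than `tolerance` are reported.
    """
    regressions = {}
    for req_id, scores in history.items():
        if len(scores) < 2:
            continue  # a single version has no trend yet
        drop = scores[-2] - scores[-1]
        if drop > tolerance:
            regressions[req_id] = round(drop, 3)
    return regressions
```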

Technical Implementation of AI Validation

Now that we understand what AI-based validation can do, let’s explore how it works under the hood.

This section highlights the architecture, algorithms, and analysis techniques that power AI-driven quality validation systems.

AI Model Architecture

- Language Models for Semantic Understanding: Transformer-based models such as BERT or GPT extract meaning from requirement text.

- Rule-Based Systems for Structural Validation: Custom logic ensures structural completeness and syntax adherence.

- Machine Learning for Pattern Recognition: ML classifiers detect common requirement defects based on training data.
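The rule-based layer is the easiest of the three to illustrate. The sketch below checks a requirement against an assumed house template of the form "The <subject> shall <action>."; the template and the messages are hypothetical, and real rule sets are usually configured per organization.

```python
import re

# Assumed template: optional "When <trigger>, " prefix, then "the <subject> shall <action>."
TEMPLATE = re.compile(r"^(?:When .+, )?[Tt]he [\w -]+? shall .+\.$")


def check_structure(requirement: str) -> list[str]:
    """Return a list of structural problems; an empty list means the rules pass."""
    text = requirement.strip()
    problems = []
    shall_count = len(re.findall(r"\bshall\b", text, flags=re.IGNORECASE))
    if shall_count == 0:
        problems.append("no 'shall' statement")
    elif shall_count > 1:
        problems.append("multiple 'shall' statements; consider splitting")
    if not text.endswith("."):
        problems.append("missing terminating period")
    # Only test the full template when the simpler checks pass.
    if not problems and not TEMPLATE.match(text):
        problems.append("does not follow '<subject> shall <action>.' template")
    return problems
```

Deterministic rules like these complement the language model and classifier: they are cheap to run and their findings are easy to explain to authors.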

Quality Detection Algorithms:

- Incomplete Requirement Identification: Compares requirement content against templates or role-specific patterns.

- Ambiguous Language Detection: Identifies vague or hedging wording and unclear phrasing (e.g., “user-friendly,” “as needed”).

- Testability Assessment Methods: Evaluates whether requirements contain measurable outcomes or acceptance criteria.
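A testability check can be sketched as a search for a measurable outcome. The example below treats a number followed by a unit or percent sign as evidence of measurability; the unit list is an assumption and would need extending for any real domain.

```python
import re

# Assumed pattern: a number followed by "%" or one of a few common units.
MEASURABLE = re.compile(
    r"\b\d+(?:\.\d+)?\s*"
    r"(?:%|(?:ms|milliseconds?|seconds?|minutes?|hours?|days?|users?|requests?)\b)",
    re.IGNORECASE,
)


def measurable_criteria(requirement: str) -> list[str]:
    """Return the measurable phrases found in a requirement."""
    return [m.group(0) for m in MEASURABLE.finditer(requirement)]


def is_testable(requirement: str) -> bool:
    """Heuristic: a requirement is testable if it states at least one measure."""
    return bool(measurable_criteria(requirement))
```

This heuristic only detects quantified outcomes. Requirements verified through explicit acceptance criteria rather than numbers would need a separate check.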

Consistency Analysis Techniques:

- Contradiction Detection Between Requirements: Flags conflicting constraints using semantic and logical comparison.

- Terminology Mapping and Standardization: NLP models group synonymous terms and suggest standard vocabulary.

- Dependency Relationship Validation: Checks if all referenced components, use cases, or actors are defined and logically linked.
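Dependency relationship validation can be illustrated with a check for references to requirements that are not defined. The `REQ-<number>` identifier scheme below is a hypothetical convention; the same idea applies to actors or use cases with a different pattern.

```python
import re

# Hypothetical ID scheme for cross-references between requirements.
REFERENCE = re.compile(r"\bREQ-\d+\b")


def undefined_references(reqs: dict[str, str]) -> dict[str, list[str]]:
    """Map each requirement ID to the referenced IDs that are not defined."""
    missing = {}
    for req_id, text in reqs.items():
        unknown = sorted({ref for ref in REFERENCE.findall(text) if ref not in reqs})
        if unknown:
            missing[req_id] = unknown
    return missing


if __name__ == "__main__":
    sample = {
        "REQ-1": "Users authenticate as defined in REQ-2.",
        "REQ-2": "Passwords follow the policy in REQ-9.",
    }
    print(undefined_references(sample))
```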

Validation Workflows and Integration

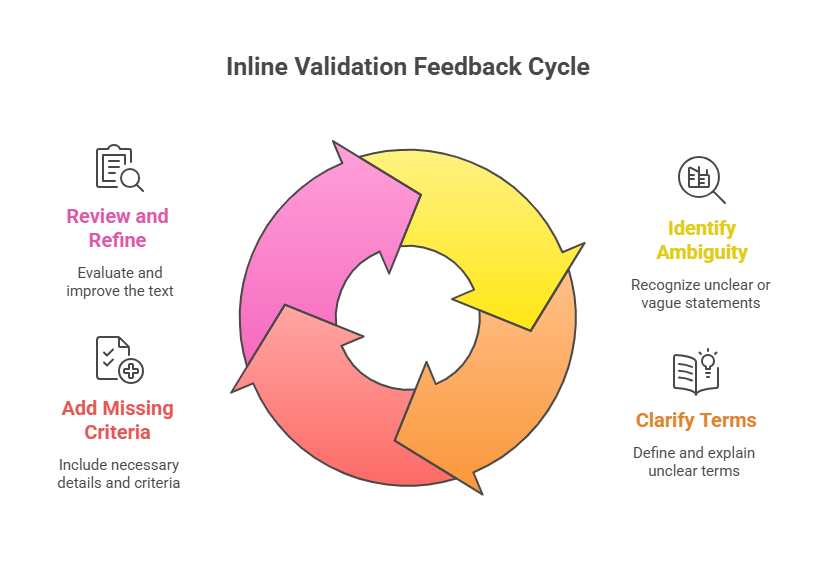

To maximize the value of AI-based validation, it’s essential to embed it within everyday requirements engineering workflows. This integration ensures continuous feedback, consistency monitoring, and alignment with existing tools.

Real-Time Quality Feedback:

Embedding AI into the requirements authoring process provides instant feedback that accelerates issue resolution.

- Inline Validation During Authoring: As requirements are written, AI suggestions appear contextually, improving clarity and structure on the spot.

- Quality Scoring Dashboards: Real-time dashboards display the quality score for each requirement and the document as a whole.

- Automated Improvement Suggestions: The system recommends better phrasing, highlights inconsistencies, and flags testability issues.

Consistency Monitoring Processes

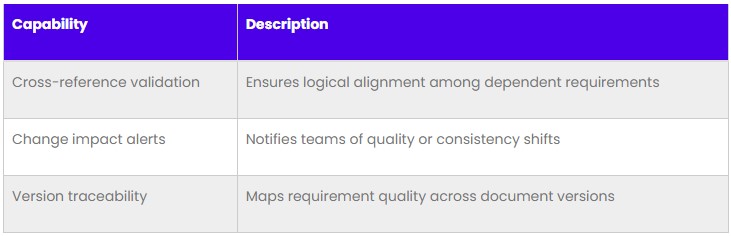

Continuous monitoring helps ensure consistency throughout the lifecycle of the requirement document.

- Continuous Cross-Reference Checking: AI engines run periodic scans to detect and alert on mismatches or dependencies that no longer hold.

- Change Impact Analysis: Whenever a requirement changes, the tool identifies affected items and highlights potential inconsistencies.

- Version Control Integration: AI validation tools track versions and changes, helping teams understand the evolution of requirement quality.
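Change impact analysis is essentially a graph traversal. A minimal sketch, assuming dependencies are available as a mapping from each requirement to the requirements it depends on (the `depends_on` structure is an assumption about how a tool might expose this):

```python
from collections import deque


def impacted_by(changed: str, depends_on: dict[str, set[str]]) -> list[str]:
    """Return every requirement that directly or transitively depends on `changed`."""
    # Invert the edges so we can walk from a requirement to its dependents.
    dependents: dict[str, set[str]] = {}
    for req, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(req)

    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for nxt in dependents.get(current, ()):
            if nxt not in seen and nxt != changed:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen)
```

For example, if REQ-2 depends on REQ-1 and REQ-3 depends on REQ-2, a change to REQ-1 marks both REQ-2 and REQ-3 for re-validation.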

Table 3: Consistency Monitoring Capabilities

Integration with Existing Tools:

For adoption to succeed, AI validation must connect with tools already in use by engineering and product teams.

- Requirements Management System APIs: Integration with tools like IBM DOORS, Jama, or Jira enables direct validation from within the authoring environment.

- Workflow Automation Triggers: AI validation results can trigger alerts, reviews, or approval workflows automatically.

- Reporting and Analytics Integration: Dashboards can be embedded in BI tools to track requirement quality trends across projects and teams.

Quality Metrics and Measurement

To continuously improve requirements quality, organizations must adopt a quantifiable and repeatable measurement approach. AI-based validation enables objective scoring and trend analysis, transforming quality management from an abstract concept into a measurable discipline.

Quality Scoring Frameworks:

AI systems assess each requirement across multiple dimensions and roll them into composite indices that reflect document-level health.

- Composite Quality Indices: Aggregated scores based on clarity, completeness, testability, and correctness.

- Dimensional Scoring: Each requirement receives sub-scores that pinpoint the specific dimension requiring improvement.

- Trend Analysis and Improvement Tracking: Historical data highlights improvement over iterations or regression in quality due to requirement changes.
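The roll-up from dimensional scores to a composite index can be sketched as a weighted average. Equal weights are assumed here purely for illustration; an organization would choose weights that reflect its own priorities.

```python
DIMENSIONS = ("completeness", "clarity", "testability", "correctness")

# Assumed equal weighting; adjust per project or regulatory context.
WEIGHTS = {dimension: 0.25 for dimension in DIMENSIONS}


def composite_score(sub_scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of the four dimensional scores (each between 0 and 1)."""
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(sub_scores[d] * weights[d] for d in DIMENSIONS) / total_weight


def weakest_dimension(sub_scores: dict[str, float]) -> str:
    """Name the dimension that most needs improvement."""
    return min(DIMENSIONS, key=lambda d: sub_scores[d])


def document_score(requirements: list[dict[str, float]]) -> float:
    """Document-level health as the mean composite score of its requirements."""
    return sum(composite_score(r) for r in requirements) / len(requirements)
```

Keeping the sub-scores alongside the composite matters: the index shows overall health, while `weakest_dimension` tells the author what to fix.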

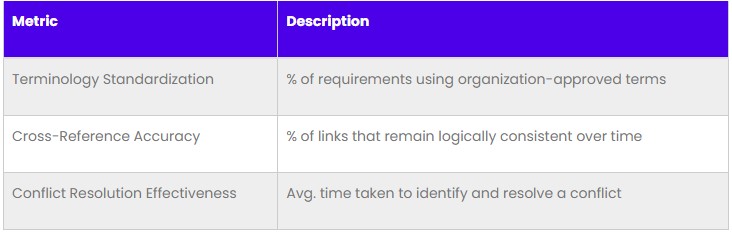

Consistency Metrics:

Consistency isn’t binary; it can be scored and benchmarked. AI-based validation quantifies consistency across documents and teams.

- Terminology Standardization Rates: Measures use of preferred vs. deprecated terms across the requirement set.

- Cross-Reference Accuracy Scores: Tracks how often linked or dependent requirements remain aligned over versions.

- Conflict Resolution Effectiveness: Evaluates the rate and speed at which inconsistencies are identified and resolved.
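As an illustration of the first metric, the terminology standardization rate can be computed as the share of glossary-term occurrences that use the preferred form. The glossary below is a hypothetical example.

```python
import re

# Hypothetical glossary: deprecated term -> preferred term.
GLOSSARY = {"client": "customer", "log in": "sign in"}


def count_term(term: str, text: str) -> int:
    return len(re.findall(rf"\b{re.escape(term)}\b", text))


def standardization_rate(requirements: list[str], glossary: dict[str, str] = GLOSSARY) -> float:
    """Preferred-term occurrences divided by all glossary-term occurrences."""
    preferred = deprecated = 0
    for text in requirements:
        lowered = text.lower()
        for old, new in glossary.items():
            deprecated += count_term(old, lowered)
            preferred += count_term(new, lowered)
    total = preferred + deprecated
    # With no glossary terms present there is nothing to standardize.
    return preferred / total if total else 1.0
```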

Table 4: Key Consistency Metrics

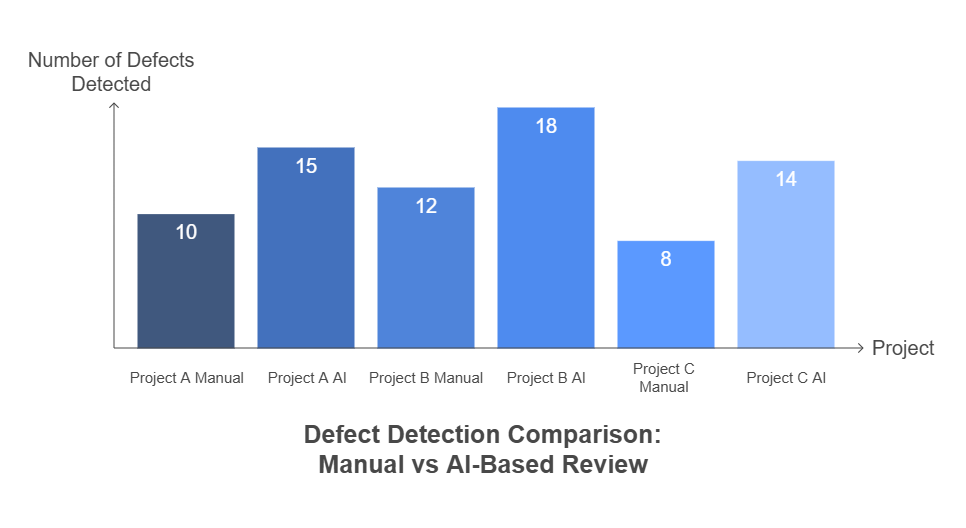

Validation Effectiveness Measures

Ultimately, the purpose of AI validation is to prevent defects, reduce rework, and accelerate development.

- Defect Detection Rates: Tracks number of issues detected by AI vs. manual review.

- False Positive/Negative Analysis: Assesses accuracy of AI-generated suggestions to build user trust.

- Time-to-Resolution Improvements: Measures time saved between defect identification and resolution.
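Detection rates and false positive/negative analysis reduce to standard precision and recall once AI flags are compared against reviewer-confirmed defects. A minimal sketch, assuming both are available as sets of requirement IDs:

```python
def detection_metrics(ai_flagged: set[str], confirmed_defects: set[str]) -> dict[str, float]:
    """Compare AI-flagged requirements with defects confirmed by reviewers."""
    true_pos = len(ai_flagged & confirmed_defects)
    false_pos = len(ai_flagged - confirmed_defects)   # flagged but not a real defect
    false_neg = len(confirmed_defects - ai_flagged)   # real defect the AI missed
    precision = true_pos / (true_pos + false_pos) if ai_flagged else 0.0
    recall = true_pos / (true_pos + false_neg) if confirmed_defects else 0.0
    return {
        "true_positives": true_pos,
        "false_positives": false_pos,
        "false_negatives": false_neg,
        "precision": precision,
        "recall": recall,
    }
```

Low precision erodes user trust because authors see too many spurious flags, while low recall means defects still slip through to manual review.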

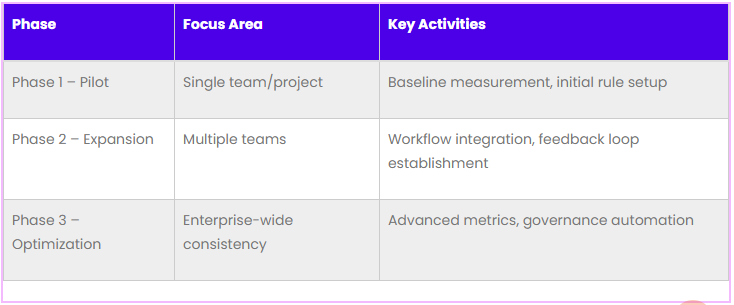

Implementation Strategy for Quality Improvement

Having explored the technical foundation and benefits of AI-based validation, the next step is to develop a pragmatic implementation strategy. A phased rollout aligned with team readiness, tool maturity, and organizational goals ensures a smooth transition and sustainable improvement.

Pilot Program Design

Starting small allows organizations to measure results, gather feedback, and fine-tune their approach before enterprise-wide adoption.

- Quality Baseline Establishment: Use historical requirement sets to calculate initial quality scores and identify current gaps.

- Validation Rule Configuration: Tailor AI rules to align with project domain, terminology, and regulatory requirements.

- Success Criteria Definition: Establish metrics to evaluate impact—e.g., increase in clarity scores, defect reduction, review time savings.

Table 5: Rollout Phase Objectives

Training and Adoption

People remain central to the success of any AI-based initiative. Equipping teams with the knowledge and skills to use validation tools is critical.

- Quality Awareness Programs: Educate stakeholders on the cost of poor requirements and how AI can address gaps.

- AI Validation Tool Training: Offer hands-on workshops and documentation to teach teams how to interpret and act on validation results.

- Best Practice Development: Document learnings and create reusable templates, checklists, and reference models.

Case Studies: Quality and Consistency Improvements

To illustrate the tangible benefits of AI-based requirement validation, this section highlights real-world examples from diverse project types. These case studies demonstrate measurable improvements in requirement quality, consistency, and downstream project outcomes.

Case Study 1: Enterprise Software Development

Challenge: A global software provider struggled with requirement ambiguity and late-stage defect discovery across multiple agile teams.

AI-Based Validation Approach:

- Implemented NLP-powered validation tool within their Jira workflow

- Tracked dimensional quality scores sprint-over-sprint

- Used automated recommendations to revise ambiguous requirements before development

Outcomes:

- 34% improvement in clarity scores over 3 months

- 27% reduction in requirement-related defects

- Average requirement review time reduced by 45%

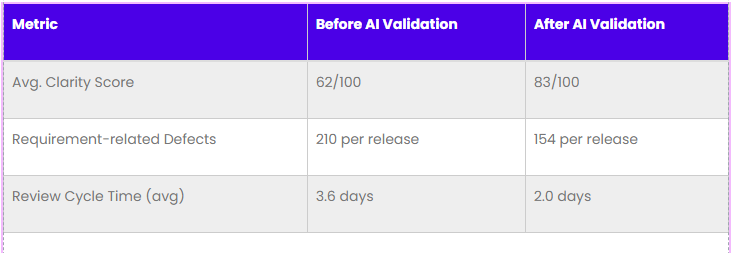

Table 6: Pre/Post Implementation Metrics

Case Study 2: Regulatory Compliance Projects

Challenge: A healthcare software firm faced compliance risks due to incomplete and inconsistent requirements in FDA-regulated modules.

AI-Based Validation Approach:

- Applied completeness validation across all compliance modules

- Standardized terminology using AI-generated glossary enforcement

- Aligned requirement sets with validation templates and traceability rules

Outcomes:

- 46% increase in completeness scores

- Compliance audit passed with 100% requirement traceability

- Regulatory documentation preparation time reduced by 38%

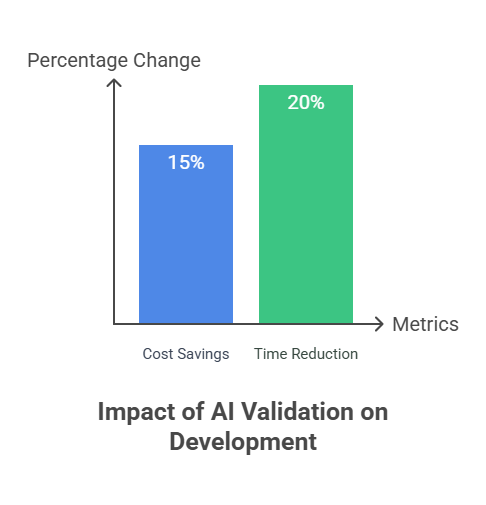

ROI of Quality-Focused AI Validation

Adopting AI-based validation delivers quantifiable returns by enhancing requirement quality, reducing rework, and accelerating product delivery. Beyond operational gains, it contributes to long-term strategic value through capability building and competitive differentiation.

Quality Improvement Value

High-quality requirements reduce errors, minimize rework, and shorten development timelines.

- Reduced Rework Costs: Fewer ambiguities and defects mean less time spent reinterpreting, redesigning, or retesting functionality.

- Faster Development Cycles: Clear, complete, and testable requirements allow for parallel development and automation readiness.

- Lower Testing Costs: Improved testability leads to more automated test cases and earlier defect detection.

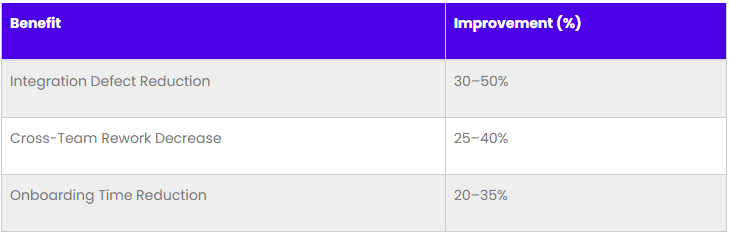

Consistency Benefits

AI-driven consistency ensures alignment across teams and systems, which translates into better collaboration and more predictable outcomes.

- Reduced Integration Issues: Cross-functional validation catches interface misalignments early.

- Improved Team Collaboration Efficiency: Common terminology and reduced contradiction streamline communication.

- Faster Onboarding: Standardized language and clearer documentation speed up new team member productivity.

Table 7: Collaboration and Consistency ROI

Best Practices for Quality and Consistency Validation

While AI-based tools offer powerful capabilities, their effectiveness depends on how organizations adopt, govern, and evolve their use. Establishing best practices ensures consistent value realization and continuous improvement.

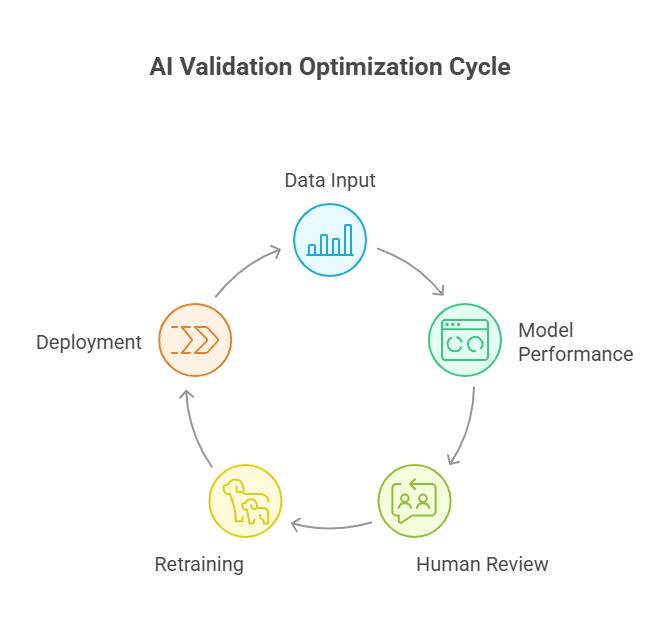

AI Validation Optimization

AI systems improve with usage, but only when organizations actively guide their tuning and governance.

- Model Training and Tuning: Use organization-specific examples to fine-tune NLP models for greater accuracy and contextual relevance.

- Validation Rule Refinement: Regularly update rule sets based on domain needs, regulatory changes, or project complexity.

- Performance Monitoring and Adjustment: Track false positives/negatives and user feedback to iteratively improve system output.

By adopting these best practices, organizations ensure that AI validation delivers sustainable value—not just improved documents, but a foundation for high-performing, quality-driven teams.

Conclusion and Recommendations

As organizations pursue digital excellence, the quality and consistency of requirements remain foundational to successful software delivery. Traditional validation approaches, constrained by manual effort and subjectivity, are no longer sufficient to manage the scale, speed, and complexity of modern development.

AI-based validation presents a transformative opportunity to elevate requirements engineering. By combining the precision of natural language processing with the scalability of machine learning, these tools enable proactive, data-driven, and real-time quality assurance.

Key Success Factors for Quality Improvement

To realize the full potential of AI validation, organizations should prioritize the following:

- Strategic Alignment: Integrate AI validation into your quality goals, compliance mandates, and product delivery strategies.

- Workflow Integration: Embed validation into requirement authoring tools, review cycles, and approval workflows.

- User Enablement: Invest in training, change management, and feedback loops to ensure adoption and continuous improvement.

Implementation Roadmap for AI Validation

- Phase 1: Assess & Baseline. Evaluate current requirement quality, select pilot areas, and configure AI validation tools.

- Phase 2: Embed & Expand. Integrate validation into daily workflows, monitor results, and expand across teams.

- Phase 3: Optimize & Institutionalize. Refine models, scale governance, and drive organizational learning through data insights.

Strategic Investment Priorities

Organizations looking to gain competitive advantage through quality should invest in:

- AI-augmented quality platforms with extensible APIs and ML feedback loops

- Terminology governance tools to ensure cross-team alignment

- Quality dashboards and metrics frameworks for leadership visibility

AI-based validation is not merely a tool—it is a strategic lever to shift from reactive quality control to proactive excellence. By embedding intelligence into the earliest phase of the software lifecycle, organizations reduce risk, accelerate delivery, and build better products.

Appendices

Appendix A: Quality Assessment Criteria and Checklists

- Completeness evaluation frameworks

- Clarity assessment guidelines

- Testability verification methods

- Correctness validation approaches

Appendix B: Consistency Validation Rules and Templates

- Terminology standardization rules

- Cross-reference validation templates

- Conflict detection algorithms

- Resolution process guidelines

Appendix C: AI Validation Tool Comparison Matrix

- Feature comparison frameworks

- Vendor evaluation criteria

- Selection decision templates

- Implementation planning guides

Appendix D: Quality Metrics Calculation Methods

- Scoring algorithm specifications

- Trend analysis methodologies

- Benchmark comparison approaches

- ROI calculation templates

Appendix E: Implementation Planning Templates

- Project planning frameworks

- Resource allocation guides

- Timeline estimation methods

- Risk management templates

Appendix F: Case Study Detailed Metrics and Analysis

- Comprehensive case study data

- Before/after comparisons

- Lessons learned documentation

- Best practice recommendations
