AI Code Assistants: A Strategic Guide to Boost Engineering Productivity

Executive Summary

The software development landscape has fundamentally transformed. Modern engineering organizations face unprecedented pressure to accelerate delivery cycles while maintaining code quality and managing increasingly complex systems. The window for competitive advantage is narrowing rapidly—as AI-powered code completion tools mature and talent constraints intensify, early adopters are establishing decisive market positions.

AI code assistants are transforming how engineering teams build, test, and deliver software—ushering in a new era of developer productivity and code quality. These tools go far beyond autocomplete, offering intelligent code generation, context-aware documentation, and security-aware development support.

The market for AI code assistants is projected to grow from $4.91 billion in 2024 to $30.1 billion by 2032—representing a 27.1% compound annual growth rate.

Recent enterprise deployments demonstrate measurable impact: Accenture reported 96% success rates among initial users, with 67% of developers using AI completion tools at least five days per week. GitHub’s research shows developers complete tasks faster—especially repetitive ones—while maintaining or improving code quality.

This whitepaper provides engineering decision-makers with a comprehensive framework for evaluating, implementing, and scaling AI-powered code completion across diverse industry contexts. Key findings include:

- Quantifiable productivity gains: 20-40% reduction in coding time for routine tasks

- Enhanced code quality: 30% fewer static analysis findings in controlled deployments

- Accelerated onboarding: New developers achieving productivity 20% faster

- Cross-industry applicability: Proven value across technology, finance, healthcare, and manufacturing sectors

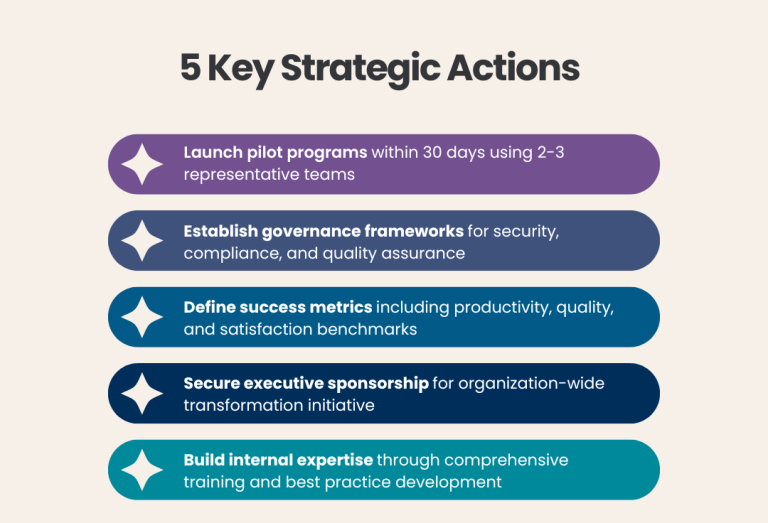

Immediate Strategic Actions for Engineering Leaders:

Market Context and Business Imperative

The Developer Productivity Crisis

Contemporary software development faces systemic challenges that directly impact business outcomes. Engineering teams report spending 41% of their time on maintenance and technical debt, and each context switch between projects costs an estimated 23 minutes of productive time. The average software engineer needs 6-8 weeks to reach full productivity in a new codebase, a significant opportunity cost in competitive markets.

Market Dynamics and Adoption Trends

The AI code completion market has reached an inflection point. Current adoption rates show 78% of organizations using AI in at least one business function, up from 55% in 2023. Among technology-forward companies, 22% have implemented comprehensive AI strategies and are realizing substantial value, while only 4% have achieved cutting-edge capabilities across all functions.

North American enterprises lead global investment in generative AI applications, accounting for over 50% of total market revenue. This geographic concentration creates both opportunities and competitive pressures for domestic engineering organizations.

Industry-Specific Drivers

Technology Sector: Rapid product iteration cycles and scalability requirements drive demand for development acceleration tools.

Financial Services: Regulatory compliance and risk management necessitate consistent, auditable code quality—areas where AI completion excels.

Healthcare: HIPAA compliance and patient safety requirements demand error reduction and standardized development practices.

Manufacturing: IoT and embedded systems development benefits from AI-assisted protocol standardization and domain knowledge transfer.

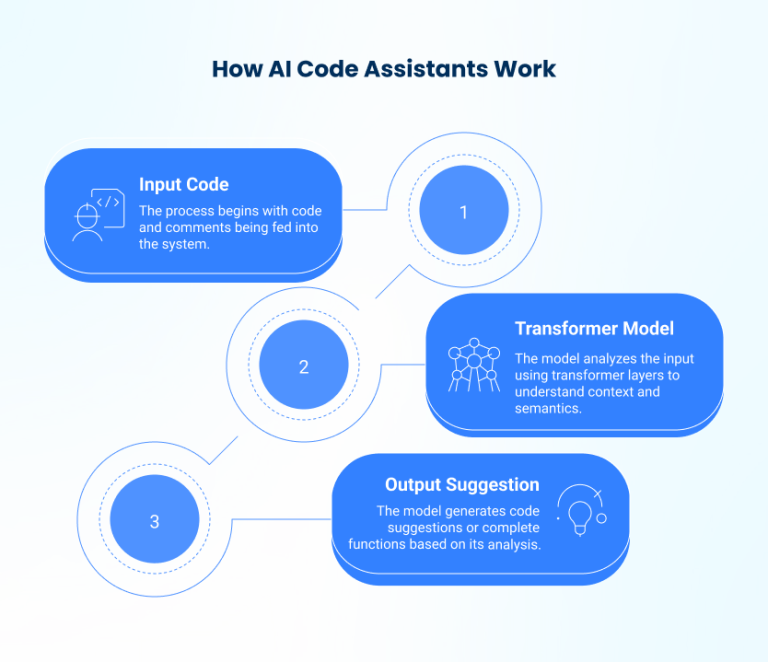

How AI Code Assistants Work: Powered by Transformer Models

What Makes AI Code Completion Different

Modern AI code completion systems leverage transformer-based large language models trained on extensive code repositories. These systems process contextual information including:

- Active codebase analysis: Real-time parsing of project structure, dependencies, and architectural patterns

- Semantic understanding: Recognition of coding intent through comments, variable names, and function signatures

- Historical pattern recognition: Learning from organizational coding practices and style guidelines

- Multi-language intelligence: Cross-language pattern transfer and API suggestion

What this means for you: Unlike traditional autocomplete tools that only suggest syntax, AI assistants understand the broader context of what you’re building and can generate entire functions that align with your architecture and coding standards.
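To make the difference from syntax-only autocomplete concrete, here is a small hand-written illustration, not output captured from any tool. The developer supplies only the signature and docstring of a hypothetical `slugify` helper; the body is the kind of complete implementation an assistant would be expected to propose from that context.

```python
import re


def slugify(title: str) -> str:
    """Convert a page title into a lowercase, hyphen-separated URL slug."""
    # The lines below stand in for an assistant's suggestion; they were
    # written by hand for this illustration.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())  # collapse non-alphanumerics
    return slug.strip("-")                             # drop leading/trailing hyphens


print(slugify("AI Code Assistants: A Strategic Guide"))
```

A suggestion like this still needs the same review and testing as any other change, in line with the governance practices described later in this guide.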

Next-Generation Development Features

Leading platforms have evolved beyond simple autocompletion to provide:

- Intelligent code generation: Complete function implementations from natural language descriptions

- Contextual documentation: Automatic generation of inline comments and documentation

- Refactoring assistance: Structured code improvement suggestions

- Security vulnerability detection: Real-time identification of common security antipatterns

Business Impact: These features reduce time spent on low-value work and accelerate delivery cycles by automating routine tasks while maintaining quality standards.

Enterprise-Ready Integration Points

Successful deployments require seamless integration with existing development infrastructure:

- IDE Integration: Native support for Visual Studio Code, JetBrains IDEs, and enterprise development environments

- CI/CD Pipeline Integration: Automated code quality checks and suggestion validation

- Version Control Systems: Git-based workflow optimization and merge conflict reduction

- Security Scanning: Integration with static analysis tools and vulnerability management platforms

Implementation Insight: Organizations with mature DevOps practices see faster AI tool adoption and higher success rates due to existing automation and quality frameworks.

Business Impact of AI Code Assistants

Productivity Metrics

Recent research provides compelling evidence of measurable productivity improvements:

GitHub Copilot Enterprise Study (2024):

- 33% average acceptance rate for AI suggestions

- 20% of total lines of code generated through AI assistance

- 72% developer satisfaction scores across medium-scale deployments

Accenture Enterprise Deployment:

- 96% success rate among initial adopters

- 43% found the tool “extremely easy to use”

- 67% usage rate (5+ days per week) indicating sustained adoption

Industry Benchmarks:

- 25-40% reduction in repetitive coding tasks

- 15-30% decrease in code review cycles

- 20% acceleration in new developer onboarding timelines

Quality Improvements

Beyond productivity, AI completion tools demonstrate measurable quality enhancements:

- Reduced Defect Rates: 30% fewer static analysis findings in controlled studies

- Consistency Improvements: Standardized coding patterns across teams and projects

- Security Enhancement: Proactive identification of common vulnerability patterns

- Documentation Quality: Improved inline documentation and code readability

Cost-Benefit Analysis

For a 50-developer engineering team, typical annual benefits include:

- Time Savings: 2,000-3,000 hours annually (valued at $150,000-$225,000)

- Quality Improvements: 20-30% reduction in post-deployment defects

- Onboarding Acceleration: 40-60 hours saved per new hire

- Knowledge Transfer: Reduced dependency on senior developer time for mentoring

Tool licensing costs typically range from $10-$39 per developer per month, representing a 5:1 to 15:1 return on investment for most organizations.
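The arithmetic behind these figures can be reproduced with a short sketch. It uses only the numbers quoted above; the function names are illustrative. Licensing is only one of the cost components listed under the ROI Calculation Framework, so comparing savings to licensing alone gives an upper bound on the return rather than the return itself.

```python
TEAM_SIZE = 50
HOURS_SAVED = (2_000, 3_000)          # annual hours saved, from the text
SAVINGS_VALUE = (150_000, 225_000)    # annual USD value, from the text
LICENSE_PER_DEV_MONTH = (10, 39)      # USD per developer per month, from the text


def implied_hourly_rate(hours: int, value: int) -> float:
    """Hourly rate implied by a savings estimate."""
    return value / hours


def annual_license_cost(team_size: int, per_dev_month: int) -> int:
    """Yearly licensing spend for the whole team."""
    return team_size * per_dev_month * 12


for hours, value in zip(HOURS_SAVED, SAVINGS_VALUE):
    print(f"{hours} h saved at ${implied_hourly_rate(hours, value):.0f}/h = ${value:,}")

low, high = (annual_license_cost(TEAM_SIZE, p) for p in LICENSE_PER_DEV_MONTH)
print(f"Annual licensing for {TEAM_SIZE} developers: ${low:,} to ${high:,}")
```

Both ends of the savings range imply the same loaded rate of $75 per hour, which is the figure to replace with your own organization's rate.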

Real-World Success Stories Across Industries

Technology and SaaS: Rapid Prototype to Production

A mid-sized customer analytics platform was struggling with API development bottlenecks that delayed feature releases by 2-3 weeks per quarter. Development teams spent excessive time on boilerplate code and integration patterns.

After implementing GitHub Copilot across three development teams:

- 25% reduction in code review iterations due to more consistent, idiomatic code

- 15% faster feature delivery cycles with reduced time spent on routine implementation

- Improved developer satisfaction: “Helps me focus on solving business problems instead of remembering syntax”

Key Success Factor: Starting with internal tooling before customer-facing features allowed teams to build confidence and establish best practices.

Financial Services: Compliance-First Development

A regional fintech company faced regulatory pressure to reduce software defects in loan processing systems while accelerating product development to compete with larger institutions.

Their Amazon CodeWhisperer deployment focused on critical financial calculations and compliance workflows:

- 20% faster onboarding for new engineering hires through consistent coding patterns

- Significant reduction in compliance-related coding errors via standardized validation patterns

- Enhanced audit readiness with improved code documentation and consistency

- Successful integration with proprietary banking APIs through customized suggestion models

Key Success Factor: Implementing comprehensive code review processes specifically for AI-generated financial logic ensured regulatory compliance while capturing productivity benefits.

Healthcare IT: Security and Standards at Scale

A healthcare IT provider managing patient data across 50+ hospital systems needed to maintain HIPAA compliance while accelerating development of patient portal features during the COVID-19 surge.

Their TabNine Enterprise deployment emphasized data security and coding consistency:

- 30% improvement in input sanitization consistency across Python, Java, and C# codebases

- Enhanced cross-team knowledge sharing through standardized security patterns

- Faster compliance audits due to consistent coding practices and automated documentation

- Zero data privacy incidents related to AI tool usage through on-premises deployment

Key Success Factor: On-premises deployment and strict data governance policies enabled AI benefits while maintaining absolute control over sensitive healthcare data.

Manufacturing: Embedded Systems Innovation

An industrial automation vendor developing IoT sensors for manufacturing equipment faced challenges standardizing communication protocols across distributed development teams in three countries.

Their multi-tool approach using GitHub Copilot for firmware development delivered:

- 40% faster firmware prototyping cycles through intelligent code suggestions for C

- Standardized communication protocols reducing integration errors between hardware components

- Improved domain knowledge transfer enabling junior developers to work effectively on complex embedded systems

- Reduced documentation burden through automated inline commenting for complex algorithms

Key Success Factor: Focusing on protocol standardization and leveraging AI for hardware abstraction layer development created measurable efficiency gains in complex embedded environments.

Strategic Implementation Framework

Phase 1: Assessment and Planning (4-6 weeks)

Current State Analysis:

- Developer productivity baseline measurement

- Code quality metrics establishment

- Technology stack compatibility assessment

- Security and compliance requirement evaluation

Pilot Team Selection:

- Choose 2-3 teams with varying skill levels and project types

- Ensure representation across different programming languages

- Select teams with measurable productivity metrics

- Include both junior and senior developers

Success Criteria Definition:

- Quantitative metrics: cycle time, defect rates, code review duration

- Qualitative measures: developer satisfaction, learning curve assessment

- Business impact: feature delivery velocity, technical debt reduction

Phase 2: Pilot Deployment (8-12 weeks)

Tool Selection and Configuration:

- Evaluate leading platforms (GitHub Copilot, Amazon CodeWhisperer, TabNine Enterprise)

- Configure security policies and compliance settings

- Establish integration with existing development tools

- Implement monitoring and analytics capabilities

Training and Enablement:

- Conduct comprehensive onboarding sessions

- Develop best practice guidelines

- Establish feedback collection mechanisms

- Create internal knowledge sharing forums

Measurement and Optimization:

- Weekly productivity metric collection

- Monthly satisfaction surveys

- Continuous feedback integration

- Iterative policy refinement

Phase 3: Controlled Expansion (12-16 weeks)

Gradual Rollout Strategy:

- Expand to additional teams based on pilot success

- Maintain 2:1 ratio of trained to new users during expansion

- Implement peer mentoring programs

- Establish centers of excellence
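The 2:1 ratio constrains how quickly a rollout can grow. The sketch below plans expansion waves under two assumptions that are not stated in the text: users onboarded in one wave count as trained by the next, and the pilot size and organization size shown are purely illustrative.

```python
def plan_waves(pilot_users: int, total_developers: int, ratio: int = 2) -> list[int]:
    """Return how many new users to onboard in each expansion wave.

    Each wave adds at most trained // ratio newcomers, so trained users
    outnumber new users by at least ratio:1.
    """
    if pilot_users < ratio:
        raise ValueError("need at least `ratio` trained users to start")
    trained = pilot_users
    waves = []
    while trained < total_developers:
        new_users = min(trained // ratio, total_developers - trained)
        waves.append(new_users)
        trained += new_users  # assumption: newcomers are trained by the next wave
    return waves


# Hypothetical example: 12 pilot users in a 50-developer organization.
print(plan_waves(12, 50))  # [6, 9, 13, 10]
```

Under these assumptions a 12-person pilot reaches a 50-developer organization in four waves, which gives a rough sense of whether the 12-16 week window is realistic for a given team size.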

Governance Framework Development:

- Code review policy integration

- Security scanning automation

- Compliance audit procedures

- Performance monitoring dashboards

Phase 4: Enterprise Scaling (Ongoing)

Organization-wide Deployment:

- Standardized onboarding processes

- Automated provisioning and configuration

- Advanced analytics and reporting

- Continuous improvement programs

Cultural Integration:

- Developer advocacy programs

- Success story sharing

- Advanced training curricula

- Innovation challenge initiatives

Risk Management and Governance

Security and Legal Risk Management

Data Protection and Intellectual Property:

- Implement code redaction for sensitive information

- Establish clear data residency requirements

- Configure appropriate access controls and audit logging

- Regular security assessments of AI-generated code
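As one way to picture code redaction, the sketch below masks obvious secrets in a snippet before it leaves the developer's machine. The two patterns are illustrative assumptions, not a complete rule set and not the mechanism of any specific vendor; a production deployment would rely on a reviewed secret-scanning policy.

```python
import re

# Illustrative patterns only; real rule sets are broader and reviewed.
REDACTION_PATTERNS = [
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "<REDACTED_AWS_KEY>"),
    # Quoted values assigned to names that look like credentials.
    (
        re.compile(
            r"(\w*(?:password|secret|api_key|token)\w*\s*=\s*)([\"']).*?\2",
            re.IGNORECASE,
        ),
        r"\1\2<REDACTED>\2",
    ),
]


def redact(text: str) -> str:
    """Apply every redaction pattern in order and return the masked text."""
    for pattern, replacement in REDACTION_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


sample = 'db_password = "hunter2"\nkey = "AKIAABCDEFGHIJKLMNOP"\n'
print(redact(sample))
```

Pattern-based redaction catches only what it is told to look for, so it complements rather than replaces the access controls and audit logging listed above.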

Critical Warning: Unchecked AI usage could introduce serious legal risk if proprietary code is unintentionally reused or if AI suggestions incorporate copyrighted material. Organizations must implement automated scanning and manual review processes to detect potential intellectual property violations.

Intellectual Property Management:

- Develop clear policies for AI-suggested code ownership

- Implement automated license compliance checking

- Establish procedures for handling copyrighted code patterns

- Mandatory legal review of AI tool usage agreements before deployment

Quality Assurance Framework:

- Non-negotiable: Mandatory human review for all AI-generated code

- Automated testing requirements for AI suggestions

- Static analysis integration for security vulnerability detection

- Performance impact assessment protocols

Continuous Monitoring:

- Real-time quality metrics collection

- Automated detection of AI-generated code issues

- Regular accuracy assessments of AI suggestions

- Feedback loop implementation for model improvement

Ethical AI Development Standards

Preventing Developer Skill Atrophy:

- Maintain mandatory skill development programs

- Implement regular “AI-free” development exercises

- Encourage critical thinking and deep code understanding

- Regular assessment of AI impact on learning and growth

Bias and Fairness:

- Monitor AI suggestions for potential bias in code patterns

- Implement diverse training data practices where possible

- Regular algorithmic fairness assessments

- Promote inclusive development practices beyond AI suggestions

Vendor Evaluation and Selection

Platform Selection Guide by Organization Type

| Criteria | Weight | GitHub Copilot | Amazon CodeWhisperer | TabNine Enterprise |
|---|---|---|---|---|
| Security & Compliance | 25% | ✅ Enterprise SSO, audit logs | ✅✅ AWS-native security, code redaction | ✅✅✅ On-premises deployment option |
| Language Support | 20% | ✅✅ 20+ languages, broad framework support | ✅ Java, Python, JavaScript focus | ✅✅ 20+ languages, extensive library support |
| IDE Integration | 15% | ✅✅ VS Code, JetBrains native | ✅ VS Code, JetBrains, command line | ✅✅ VS Code, JetBrains, Vim, Emacs |
| Customization | 15% | ⚠️ Limited organizational training | ✅✅ Custom model fine-tuning | ✅✅✅ Extensive customization options |
| Cost Structure | 10% | ✅ $19-39/user/month | ✅✅ Usage-based pricing | ⚠️ Enterprise licensing |
| Enterprise Features | 10% | ✅ Admin dashboard, usage analytics | ✅✅ CloudTrail integration, VPC support | ✅✅ Advanced admin controls |
| Performance | 5% | ✅✅ High suggestion accuracy | ✅✅ Fast response times | ✅ Offline capability |
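The matrix above can be turned into a single weighted score per platform. The sketch below assumes a scoring scheme the table does not spell out: each check mark counts as one point and a warning sign counts as zero. Teams should substitute their own weights and ratings.

```python
# Weights in percent, taken from the Weight column.
WEIGHTS = {
    "Security & Compliance": 25,
    "Language Support": 20,
    "IDE Integration": 15,
    "Customization": 15,
    "Cost Structure": 10,
    "Enterprise Features": 10,
    "Performance": 5,
}

# Assumption: one point per check mark, zero for a warning sign.
RATINGS = {
    "GitHub Copilot": [1, 2, 2, 0, 1, 1, 2],
    "Amazon CodeWhisperer": [2, 1, 1, 2, 2, 2, 2],
    "TabNine Enterprise": [3, 2, 2, 3, 0, 2, 1],
}


def weighted_score(ratings: list[int]) -> float:
    """Weighted average of ratings on the 0-3 scale used above."""
    return sum(w * r for w, r in zip(WEIGHTS.values(), ratings)) / 100


for platform, ratings in RATINGS.items():
    print(f"{platform}: {weighted_score(ratings):.2f}")
```

Under this scheme the scores come out to 1.25, 1.65, and 2.15 respectively. The ranking is sensitive to the weights, so an organization that values cost over customization could see a different order.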

Quick Selection Guide:

- Startups/Scale-ups: GitHub Copilot for rapid deployment and developer familiarity

- Mid-Market Enterprises: Amazon CodeWhisperer for balanced security and customization

- Large Enterprises: TabNine Enterprise for maximum control and on-premises deployment

Selection Criteria by Organization Type

Startup/Scale-up Organizations:

- Prioritize ease of deployment and cost-effectiveness

- GitHub Copilot often provides optimal balance

- Focus on developer productivity over advanced governance

Mid-Market Enterprises:

- Balance between functionality and administrative control

- Consider hybrid approaches with multiple tools

- Emphasize integration with existing toolchains

Large Enterprises:

- Prioritize security, compliance, and customization

- TabNine Enterprise for maximum control

- Amazon CodeWhisperer for AWS-native environments

Measuring Success and ROI

Key Performance Indicators

Productivity Metrics:

- Cycle Time: Time from code commit to production deployment

- Code Generation Rate: Percentage of code generated through AI assistance

- Review Efficiency: Average time spent in code review processes

- Feature Velocity: Number of features delivered per sprint/iteration

Quality Metrics:

- Defect Density: Bugs per thousand lines of code

- Security Vulnerability Rate: Security issues identified in AI-generated code

- Code Maintainability: Cyclomatic complexity and technical debt metrics

- Test Coverage: Percentage of AI-generated code covered by automated tests

Developer Experience Metrics:

- Satisfaction Scores: Regular developer survey results

- Adoption Rate: Percentage of eligible developers actively using AI tools

- Learning Curve: Time required for new developers to become productive

- Retention Impact: Correlation between AI tool usage and developer retention
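Several of these indicators reduce to simple formulas once the raw data is available. The following is a minimal sketch with hypothetical function names and sample values; where the inputs come from (version control, issue tracker, tool analytics) will differ by organization.

```python
from datetime import datetime
from statistics import median


def defect_density(defects: int, lines_of_code: int) -> float:
    """Bugs per thousand lines of code."""
    return defects / (lines_of_code / 1000)


def adoption_rate(active_users: int, eligible_users: int) -> float:
    """Percentage of eligible developers actively using AI tools."""
    return 100 * active_users / eligible_users


def code_generation_rate(ai_lines: int, total_lines: int) -> float:
    """Percentage of code generated through AI assistance."""
    return 100 * ai_lines / total_lines


def median_cycle_time_hours(changes: list[tuple[datetime, datetime]]) -> float:
    """Median hours from code commit to production deployment."""
    return median((deployed - committed).total_seconds() / 3600
                  for committed, deployed in changes)


# Hypothetical sample data for illustration.
changes = [
    (datetime(2024, 3, 1, 9), datetime(2024, 3, 2, 9)),   # 24 hours
    (datetime(2024, 3, 4, 9), datetime(2024, 3, 6, 9)),   # 48 hours
    (datetime(2024, 3, 7, 9), datetime(2024, 3, 8, 21)),  # 36 hours
]
print(defect_density(18, 45_000), adoption_rate(35, 50), median_cycle_time_hours(changes))
```

Capturing the same calculations before the pilot starts gives the baseline that later comparisons depend on.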

ROI Calculation Framework

Direct Benefits:

- Developer time savings (hours per week × hourly rate)

- Reduced bug fixing costs (defects avoided × average fix cost)

- Faster feature delivery (time-to-market improvements)

- Improved onboarding efficiency (reduced mentoring time)

Indirect Benefits:

- Enhanced developer satisfaction and retention

- Improved code consistency and maintainability

- Reduced technical debt accumulation

- Enhanced competitive positioning

Cost Components:

- Tool licensing fees

- Training and onboarding costs

- Infrastructure and integration expenses

- Ongoing support and maintenance

Sample ROI Calculation (50-developer team):

- Annual productivity gains: $300,000

- Quality improvement savings: $75,000

- Tool licensing costs: $30,000

- Implementation costs: $25,000

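- Net ROI: approximately 582% over the first year when both benefit lines are counted ((375,000 - 55,000) / 55,000), or 445% when counting productivity gains alone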
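The sample figures can be checked with a few lines of arithmetic. This sketch takes net ROI to be (benefits - costs) / costs, which is an assumed definition since the text does not state one. On that definition, counting productivity gains alone yields about 445%, and counting both listed benefits yields about 582%.

```python
def net_roi_percent(benefits: float, costs: float) -> float:
    """Net return on investment as a percentage of total costs."""
    return (benefits - costs) / costs * 100


productivity_gains = 300_000
quality_savings = 75_000
total_costs = 30_000 + 25_000  # licensing + implementation

print(f"Productivity only: {net_roi_percent(productivity_gains, total_costs):.0f}%")
print(f"All benefits:      {net_roi_percent(productivity_gains + quality_savings, total_costs):.0f}%")
```

Stating which benefits are included matters when comparing ROI figures across vendors or internal proposals.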

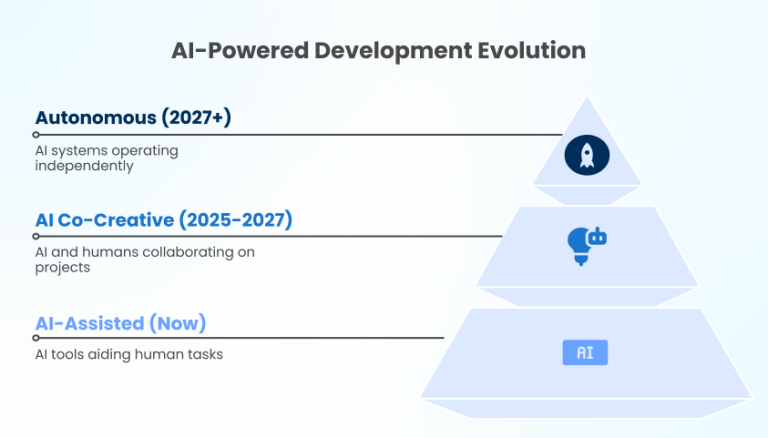

The Evolution of AI-Augmented Development

Three-Phase Development Evolution

Technology Evolution Trends

Phase 1: AI-Assisted Development (Current State)

Current capabilities focus on accelerating individual developer tasks through intelligent code completion, documentation generation, and basic error detection.

Phase 2: AI Co-Creative Development (2025-2027)

- Natural language to code translation: Complete feature implementation from business requirements

- Automated testing and quality assurance: AI-generated test suites and quality validation

- Intelligent refactoring and optimization: System-wide code improvement recommendations

- Cross-platform code generation: Automatic adaptation of code across different environments

Phase 3: Autonomous Development (2027+)

- End-to-end feature delivery: From user story to production deployment

- Self-healing systems: Automatic bug detection, fixing, and deployment

- Intelligent architecture evolution: AI-driven system design and optimization

- Predictive maintenance: Proactive identification and resolution of technical debt

Strategic Implication: Organizations establishing AI development capabilities now will be positioned to leverage more advanced automation as it becomes available, while competitors struggle with basic implementation challenges.

Organizational Transformation Requirements

Role Evolution Timeline:

- 2024-2025: Shift from code writing to system design and architecture

- 2025-2027: Enhanced emphasis on AI-human collaboration and code validation

- 2027+: Focus on business logic orchestration and AI system management

Critical Skill Development:

- AI prompt engineering and optimization techniques

- Advanced code quality assessment and validation methodologies

- System architecture and design thinking capabilities

- Cross-functional collaboration and stakeholder management

Strategic Recommendations

Short-term (6-12 months):

- Complete pilot deployments and success measurement

- Establish governance frameworks and best practices

- Build internal expertise and training programs

- Plan for controlled expansion across organization

Medium-term (1-2 years):

- Achieve organization-wide adoption and optimization

- Develop advanced customization and integration capabilities

- Establish AI-augmented development as competitive advantage

- Measure and communicate business impact

Long-term (2-5 years):

- Pioneer next-generation AI development tools

- Develop industry-leading practices and thought leadership

- Explore autonomous development capabilities

- Create AI-native development culture and processes

Strategic Action Plan for Engineering Leaders

The integration of AI-powered code completion tools represents a strategic imperative for modern engineering organizations. With demonstrated productivity improvements of 20% to 40% and quality enhancements of up to 30%, these tools provide measurable business value across diverse industry contexts.

“The future belongs to companies that can harness artificial intelligence to amplify human potential, not replace it.” – Satya Nadella, CEO, Microsoft

The market opportunity is substantial, with the AI code tools sector projected to grow from $4.91 billion in 2024 to $30.1 billion by 2032. Early adopters are already realizing competitive advantages through faster delivery cycles, improved code quality, and enhanced developer satisfaction.

“Engineering teams that embrace AI-assisted development today will define the productivity standards of tomorrow.” – Industry Research, 2024

Immediate 30-Day Action Plan:

Week 1-2: Assessment and Preparation

- Conduct Internal Assessment: Evaluate current developer productivity metrics and identify optimization opportunities

- Stakeholder Alignment: Secure executive sponsorship and budget allocation for pilot programs

- Team Selection: Identify 2-3 representative teams for controlled deployments

Week 3-4: Pilot Initiation

- Tool Evaluation: Complete vendor assessment and select initial platform

- Security Framework: Establish governance policies for AI tool usage and code review

- Training Program: Develop comprehensive onboarding curriculum and best practices

90-Day Success Metrics:

- Productivity Improvement: 15-25% reduction in routine coding tasks

- Quality Enhancement: 20% fewer code review iterations

- Developer Satisfaction: 80%+ positive feedback on AI tool usage

- Adoption Rate: 70%+ regular usage among pilot team members

Long-Term Competitive Positioning:

6-Month Milestones:

- Organization-wide deployment across all development teams

- Measurable business impact through accelerated delivery cycles

- Established internal expertise and best practice documentation

12-Month Vision:

- Industry-leading development productivity metrics

- AI-augmented development as a key competitive differentiator

- Foundation for next-generation autonomous development capabilities

The Decision Point: Engineering leaders who act decisively to implement AI-powered code completion will not just adapt to industry changes—they will define the new standard for productivity and innovation. The question isn’t whether AI will transform software development, but whether your organization will lead or follow this transformation.

The time for strategic action is now. Your competitive future depends on the decisions you make today.

Appendix A: Implementation Checklist

Pre-Implementation Phase

- [ ] Establish baseline productivity metrics

- [ ] Conduct security and compliance assessment

- [ ] Define success criteria and measurement framework

- [ ] Select pilot teams and use cases

- [ ] Obtain necessary approvals and budget allocation

Pilot Phase

- [ ] Configure tool integrations and security settings

- [ ] Conduct comprehensive team training

- [ ] Implement monitoring and feedback collection

- [ ] Regular progress reviews and optimization

- [ ] Document lessons learned and best practices

Scaling Phase

- [ ] Develop standardized onboarding processes

- [ ] Create governance policies and procedures

- [ ] Establish centers of excellence

- [ ] Implement advanced analytics and reporting

- [ ] Plan for continuous improvement and evolution

Success Measurement

- [ ] Weekly productivity metric collection

- [ ] Monthly developer satisfaction surveys

- [ ] Quarterly business impact assessment

- [ ] Annual strategic review and planning

- [ ] Continuous benchmark comparison and optimization


Author

Neha Adapa