
Building Resilient and Hyper-Personalized Lending Platforms: An Engineering Guide to ML-Driven Origination and Dynamic Pricing at Scale

Executive Summary: The Future of Lending Is Real-Time, AI-Native, and Infrastructure-Centric

The next decade of lending is a race, and AI-native platforms are already pulling ahead. Industry projections suggest that by 2027 more than 70% of lending decisions will be powered by real-time AI systems that approve, price, and adapt in seconds. Early adopters report 2–3x approval lift and 40% faster time-to-market for new lending products, without increasing risk.

Compute efficiency, sub-150ms decisioning, and infrastructure built for adaptive intelligence will define the winners. The lenders who delay will face eroding market share as faster, more personalized competitors capture their borrowers.

Incumbents are constrained by batch-oriented architectures, legacy scoring models, and hardcoded rule engines that cannot adapt to volatility or personalization demands. In contrast, a new breed of lending platforms is emerging — systems engineered to ingest high-velocity data, perform low-latency inference, and dynamically price credit risk with continuous intelligence loops.

This whitepaper is a technical manifesto for engineering leaders building resilient, hyper-personalized, and revenue-maximizing lending platforms. We unpack the architectural stack, machine learning pipelines, real-time decisioning systems, and MLOps frameworks required to operationalize AI at industrial scale.

Our thesis is simple: machine learning isn’t an add-on; it is the operating system of next-gen lending. Done right, it can deliver a revenue lift of 50% or more, a 60% gain in operational efficiency, and significantly higher borrower satisfaction through dynamic, explainable, and risk-adjusted personalization.

Key Outcomes of ML-Native Lending Architectures:

- >50% Revenue Uplift: via reinforcement learning–driven dynamic pricing models that optimize LTV/CAC ratios at the segment-of-one level.

- <150ms Decision Latency: for real-time credit decisions powered by containerized microservices and event-driven data pipelines.

- End-to-End Auditability: from raw data lineage to model explainability (XAI), fully compliant with GDPR, CCPA, and OCC guidelines.

- Elastic Scalability: through stateless services, auto-scaling model endpoints, and streaming-first architectures.

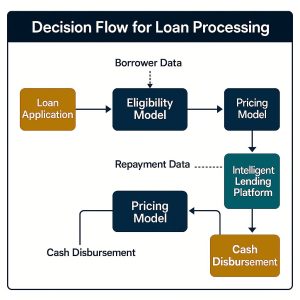

Building AI-Powered Lending Platforms: A Streamlined Approach

When you’re building an AI-driven lending platform, you’re creating a digital loan officer that makes split-second lending decisions at scale. The key is building a system that’s modular, real-time, and fault-tolerant while supporting continuous learning.

Data Foundation: The Core Engine

The heart of an AI lending platform is its real-time data engine — the system that determines whether you approve a loan in milliseconds or miss the opportunity entirely. For lenders, every 100ms of delay can mean abandoned applications, lost revenue, and damaged borrower trust.

This “traffic control system” manages high-velocity data streams — from borrower applications and credit bureau updates to live transaction feeds — ensuring the models always act on the freshest, most reliable information.

Kafka or Pulsar handle the constant flow of borrower applications, credit bureau updates, bank transactions, and third-party enrichments. Both platforms can scale to millions of events per second, and with replication and durable acknowledgments configured, they avoid losing data even during peak application periods.

Feature Store: Maintaining AI Consistency

Your feature store (Feast or Hopsworks) acts as the platform’s memory bank, storing processed borrower features. The critical aspect is online/offline feature parity – ensuring your AI model sees identical data during training and live decision-making. This prevents the common problem where models perform well in testing but fail in production.
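A common way to get online/offline parity is to define each feature transformation exactly once and call that same function from both the offline training job and the online serving path. The sketch below assumes a simplified in-memory transaction record covering one month of activity; all names (`Transaction`, `borrower_features`, `build_training_rows`, `serve_features`) are hypothetical and are not part of the Feast or Hopsworks APIs.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Transaction:
    amount: float  # positive = inflow (e.g. salary), negative = outflow
    is_debt_payment: bool = False


def borrower_features(txns: list[Transaction]) -> dict[str, float]:
    """Single source of truth for feature logic, shared by training and serving.

    Hypothetical assumption: `txns` covers exactly one calendar month.
    """
    inflows = [t.amount for t in txns if t.amount > 0]
    debt = sum(-t.amount for t in txns if t.is_debt_payment)
    income = sum(inflows)
    return {
        "monthly_income": income,
        "avg_inflow": mean(inflows) if inflows else 0.0,
        "debt_to_income": debt / income if income else 0.0,
    }


def build_training_rows(history: dict[str, list[Transaction]]) -> dict[str, dict[str, float]]:
    # Offline path: batch over historical borrowers.
    return {borrower_id: borrower_features(txns) for borrower_id, txns in history.items()}


def serve_features(live_txns: list[Transaction]) -> dict[str, float]:
    # Online path: the same function, applied per request.
    return borrower_features(live_txns)
```

Because both paths call `borrower_features`, a logic change cannot silently diverge between training and production.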

Real-Time Processing Pipeline

Apache Flink or Spark Structured Streaming handle streaming feature transforms, acting like data scientists working 24/7. When a bank transaction arrives, these systems instantly calculate income patterns, spending behavior, and debt ratios while the application is being processed.
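The core idea behind a streaming feature transform is incremental windowed aggregation: each arriving event updates running totals, and events that age out of the window are subtracted. The plain-Python sketch below illustrates that idea for a rolling cashflow window. It is not Flink or Spark API code, it assumes events arrive in timestamp order, and the class and field names are hypothetical.

```python
from collections import deque


class RollingCashflow:
    """Rolling inflow/outflow/debt totals over a time window, updated per event."""

    def __init__(self, window_seconds: int = 30 * 24 * 3600):
        self.window = window_seconds
        self.events = deque()  # (timestamp, amount, is_debt), oldest first
        self.inflow = 0.0
        self.outflow = 0.0
        self.debt = 0.0

    def _apply(self, amount: float, is_debt: bool, sign: int) -> None:
        # sign=+1 adds an event to the totals, sign=-1 removes an expired one.
        if amount > 0:
            self.inflow += sign * amount
        else:
            self.outflow += sign * -amount
            if is_debt:
                self.debt += sign * -amount

    def on_event(self, ts: int, amount: float, is_debt: bool = False) -> dict[str, float]:
        self.events.append((ts, amount, is_debt))
        self._apply(amount, is_debt, +1)
        # Evict events that have fallen out of the window (ts - window, ts].
        while self.events and self.events[0][0] <= ts - self.window:
            _, old_amount, old_is_debt = self.events.popleft()
            self._apply(old_amount, old_is_debt, -1)
        dti = self.debt / self.inflow if self.inflow else 0.0
        return {"inflow": self.inflow, "outflow": self.outflow, "debt_to_income": dti}
```

Each event costs amortized O(1) work, which is what keeps per-application feature updates within a millisecond-scale budget.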

Architecture Management

The deterministic feature registry enforces data quality through schema contracts, preventing malformed data from contaminating your models. Meanwhile, orchestration tools like Flyte or Metaflow create lineage-aware workflows that track every data transformation.
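A schema contract can be thought of as a per-field specification that every record must satisfy before it reaches a model. The sketch below is a minimal stand-in, assuming a hypothetical application contract with illustrative field names; production systems typically express the same rules in Avro, Protobuf, or JSON Schema and enforce them at the registry or ingestion layer.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class FieldSpec:
    type_: type
    required: bool = True
    min_value: Optional[float] = None


# Hypothetical contract for an application event; field names are illustrative.
APPLICATION_CONTRACT = {
    "applicant_id": FieldSpec(str),
    "requested_amount": FieldSpec(float, min_value=0.0),
    "annual_income": FieldSpec(float, min_value=0.0),
    "employer": FieldSpec(str, required=False),
}


def validate(record: dict[str, Any], contract: dict[str, FieldSpec]) -> list[str]:
    """Return contract violations; an empty list means the record is accepted."""
    errors = []
    for name, spec in contract.items():
        if record.get(name) is None:
            if spec.required:
                errors.append(f"{name}: missing required field")
            continue
        value = record[name]
        if not isinstance(value, spec.type_):
            errors.append(f"{name}: expected {spec.type_.__name__}, got {type(value).__name__}")
        elif spec.min_value is not None and value < spec.min_value:
            errors.append(f"{name}: {value} below minimum {spec.min_value}")
    # Unknown fields are rejected so producers cannot silently add columns.
    for name in sorted(record.keys() - contract.keys()):
        errors.append(f"{name}: field not in contract")
    return errors
```

Malformed records are quarantined with their violation list instead of flowing into feature computation.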

This lineage tracking becomes crucial for regulatory compliance – you can trace any lending decision back to its original data sources with full version control and metadata.

Scale and Performance Requirements

Modern lending platforms operate at terabyte-scale across multiple regions while maintaining millisecond-latency response times. The architecture must be fault-tolerant (downtime equals lost revenue) and support continuous model retraining as borrower behavior and economic conditions evolve.

This foundation enables AI lending platforms to discover patterns human underwriters might miss while maintaining the reliability and explainability that financial regulations demand. You’re not just automating existing processes – you’re creating entirely new capabilities for understanding creditworthiness at unprecedented speed and scale.

Decision Intelligence: The Real-Time Brain of Lending

AI lending platforms are no longer passive scoring engines — they are real-time decision systems that combine human-grade judgment with machine-speed precision. In practice, this means:

- <150ms approvals during peak traffic

- Dynamic pricing that adjusts by borrower segment-of-one

- Audit-ready decisions that regulators can trust

The policy runtime fuses hardcoded compliance rules, machine-learned risk and fraud scores, and dynamic pricing logic into a single execution layer that responds in milliseconds, making it the brain of your lending business.

Deterministic Policy Filters

Every decision starts with the non-negotiables. Think income thresholds, DTI ratios, and jurisdiction-specific regulations. Engines like Drools enforce these rules instantly, acting as the platform’s legal gatekeepers. Applications that fail here are blocked without wasting compute on unnecessary model calls.
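The gatekeeping step can be sketched as a pure function that returns every failed rule, so the caller can block the application before any model is invoked. This is plain Python, not Drools rule syntax, and the thresholds (`MIN_INCOME`, `MAX_DTI`) and the blocked jurisdiction code are hypothetical placeholders; real values come from credit policy and legal review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    annual_income: float
    monthly_debt: float
    requested_amount: float
    state: str


# Illustrative thresholds only (hypothetical).
MIN_INCOME = 25_000.0
MAX_DTI = 0.43
BLOCKED_STATES = {"XX"}  # hypothetical jurisdiction where the product is not offered


def hard_rule_failures(app: Application) -> list[str]:
    """Evaluate non-negotiable rules; any failure blocks the application before model calls."""
    failures = []
    if app.annual_income < MIN_INCOME:
        failures.append("INCOME_BELOW_MINIMUM")
    # Annualized debt over annual income equals the monthly DTI ratio.
    dti = (app.monthly_debt * 12) / app.annual_income if app.annual_income > 0 else float("inf")
    if dti > MAX_DTI:
        failures.append("DTI_ABOVE_MAXIMUM")
    if app.state in BLOCKED_STATES:
        failures.append("JURISDICTION_NOT_SUPPORTED")
    return failures
```

Returning all failures, rather than only the first, gives compliance a complete reason list for the audit trail.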

Model Inference Infrastructure

Once an application clears the hard rules, the machine learning models take over. These models, served through low-latency inference runtimes such as ONNX Runtime or NVIDIA Triton Inference Server, evaluate risk, fraud, affordability, and pricing in real time. It’s like having a team of risk analysts, fraud examiners, and pricing actuaries scoring every file simultaneously, and doing it in under 150 milliseconds.

Modular Decision Graphs

AI decisions aren’t made in one shot — they’re processed through a modular decision graph. Picture a directed pipeline where each module handles a different decision component: fraud check, affordability analysis, eligibility scoring, pricing strategy. The system can short-circuit any path if a blocker is hit, saving time and reducing system load.
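A minimal version of the short-circuiting behavior can be expressed as an ordered list of modules, each of which either lets the application continue or returns a blocking reason. The sketch simplifies the graph to a linear chain; the module names, score fields, and thresholds are hypothetical, and a production graph would typically allow branching and parallel nodes.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Decision:
    approved: bool = True
    reasons: list[str] = field(default_factory=list)
    trace: list[str] = field(default_factory=list)  # modules that actually ran


# A module inspects the context and returns None (continue) or a blocking reason.
Module = Callable[[dict], Optional[str]]


def fraud_check(ctx: dict) -> Optional[str]:
    return "FRAUD_SUSPECTED" if ctx["fraud_score"] > 0.9 else None


def affordability(ctx: dict) -> Optional[str]:
    return "UNAFFORDABLE" if ctx["payment"] > 0.4 * ctx["monthly_income"] else None


def eligibility(ctx: dict) -> Optional[str]:
    return "INELIGIBLE" if ctx["risk_score"] < 0.5 else None


def run_graph(ctx: dict, modules: list[tuple[str, Module]]) -> Decision:
    decision = Decision()
    for name, module in modules:
        decision.trace.append(name)
        reason = module(ctx)
        if reason is not None:
            # Short-circuit: skip the remaining (possibly expensive) modules.
            decision.approved = False
            decision.reasons.append(reason)
            break
    return decision


# Cheap, high-rejection checks go first to save downstream compute.
PIPELINE = [("fraud", fraud_check), ("affordability", affordability), ("eligibility", eligibility)]
```

The `trace` field records which nodes executed, which feeds directly into the audit logging described below.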

Version-Controlled Policy Trees

No decision logic is static. That’s why modern AI lending platforms treat policies like code — fully versioned, testable, and revertible. Policy trees and model configurations are stored in Git-like systems, allowing for A/B testing, rollback, and regulatory traceability. Every decision can be traced back to the exact version of the model and rules that produced it.
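For A/B testing with traceability, one widely used approach is deterministic hash-based bucketing: the same applicant always maps to the same policy version for a given experiment, so any past decision can be replayed. The version labels and experiment names below are hypothetical; rollback amounts to setting the treatment share to zero.

```python
import hashlib

# Hypothetical registry: policy versions pinned to Git-style release identifiers.
POLICY_VERSIONS = {"control": "policy-v41", "treatment": "policy-v42"}


def assign_policy(applicant_id: str, experiment: str, treatment_share: float = 0.1) -> str:
    """Deterministically map an applicant to a policy version.

    Hashing (experiment, applicant_id) keeps assignment stable across requests
    and independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{applicant_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # approximately uniform in [0, 1)
    arm = "treatment" if bucket < treatment_share else "control"
    return POLICY_VERSIONS[arm]
```

Logging the returned version string with each decision is what makes "which rules produced this outcome?" answerable later.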

Built for Audits, Designed for Speed

Every decision node emits detailed logs: input features, SHAP explanations, confidence scores, overrides. These aren’t just for internal debugging — they form the audit trail regulators now expect. When you’re issuing financial decisions at scale, explainability and accountability are table stakes.
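One way to make each node's evidence queryable is to emit it as a single JSON line into an append-only log. The field names below are a hypothetical schema chosen to mirror the items listed above (inputs, explanations, scores, overrides) plus the model and policy versions.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(decision_id: str, node: str, features: dict, score: float,
                 attributions: dict, model_version: str, policy_version: str,
                 override: Optional[str] = None) -> str:
    """Serialize one decision node's evidence as a single JSON line (hypothetical schema)."""
    record = {
        "decision_id": decision_id,
        "node": node,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "policy_version": policy_version,
        "input_features": features,
        "score": score,
        "attributions": attributions,  # e.g. SHAP values per feature
        "override": override,          # set when a human or rule overrides the model
    }
    # sort_keys gives a stable field order, which simplifies diffing and hashing.
    return json.dumps(record, sort_keys=True)
```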

This decision architecture turns an AI lending platform into more than just automation — it becomes a real-time operating system for credit. One that moves fast, explains itself clearly, and never forgets how it made a decision.

Microservices Architecture: Building for Speed, Scale, and Separation

When you’re scaling an AI-native lending platform, monoliths break under pressure. You need a modular system that moves fast, isolates risk, and scales without losing control. Microservices give you that backbone.

Kubernetes as Core Infrastructure

Your platform runs on Kubernetes with service meshes like Istio or Linkerd handling secure communication, observability, and traffic control — essential for regulated environments.

Domain-Driven Services

You split functionality into self-contained services — Origination, Scoring, Pricing, Fraud. Each owns its logic, data, and release cycles. This isolation means teams ship independently, and failures stay contained.

Resilient Workflow Orchestration

Lending is multi-step and failure-prone. With Temporal or Conductor, you orchestrate retries, handoffs, and long-running flows across services — reliably triggering scoring, KYC, offers, and signing.
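Temporal and Conductor let you declare retry policies per activity or task. The sketch below shows only the underlying idea for a single step, exponential backoff with full jitter, and does not provide the durable state that those engines persist across process restarts. `TransientError` and `with_retries` are hypothetical names.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Raised by a step for failures worth retrying (timeouts, 503s)."""


def with_retries(step: Callable[[], T], max_attempts: int = 5, base_delay: float = 0.2,
                 max_delay: float = 5.0, sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None) -> T:
    rng = rng or random.Random()
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except TransientError:
            if attempt == max_attempts:
                raise  # retries exhausted: surface to the workflow for compensation
            # Exponential backoff with "full jitter": sleep a random time up to the cap.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng.uniform(0, cap))
    raise AssertionError("unreachable")
```

Jitter spreads retries out so that a bureau outage does not turn into a synchronized retry storm when it recovers.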

Inference Co-Location

You deploy models alongside app logic. Scoring runs within the same pod, avoiding latency from cross-service calls and serving decisions in real time.

Smart Autoscaling

Services scale on borrower load, model demand, and latency thresholds. Whether it’s 100 or 100,000 requests, your system flexes without manual tuning.
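For reference, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes while the ratio stays within a tolerance (0.1 by default). The function below reproduces that arithmetic so you can reason about how a custom metric such as per-pod request rate would scale a scoring service; the min/max replica bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica calculation for a per-pod average metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 pods each handling 300 requests per second against a target of 100 yields 12 pods.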

You’re not just breaking the system into parts — you’re engineering speed, safety, and control into every piece.

Operationalizing ML: Real-Time Intelligence at Scale

Deploying machine learning in lending isn’t about pushing models to production — it’s about running a real-time, compliant intelligence loop. In finance, trust, traceability, and speed must be built into every stage of the ML lifecycle.

Training, Versioning, and Governance

Every model is trained on versioned data snapshots. Tools like MLflow or Metaflow track source code, parameters, datasets, and environments, ensuring full auditability. When regulators ask why a decision was made months ago, you can reconstruct it from the exact model version, feature values, and policy rules that produced it.

CI/CD for Machine Learning

Just like code, models follow CI/CD pipelines with Argo Workflows or Tekton. Automated tests, canary deployments, and shadow mode comparisons ensure only validated models go live. If performance dips, rollback is instant via GitOps-pinned states.
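The "only validated models go live" step is usually an automated gate that compares a challenger against the current champion on offline and shadow-traffic metrics. The sketch below assumes hypothetical metrics and thresholds (no AUC regression, the 150ms latency budget, and a cap on approval-rate shift); real gates are set with the model risk team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalReport:
    auc: float              # discrimination on a held-out or shadow-traffic sample
    p99_latency_ms: float   # measured on shadow traffic
    approval_rate: float    # share of applications the model would approve


def should_promote(champion: EvalReport, challenger: EvalReport,
                   max_auc_drop: float = 0.0, latency_budget_ms: float = 150.0,
                   max_approval_shift: float = 0.05) -> tuple[bool, list[str]]:
    """Gate a challenger model; every failed check is returned so the pipeline can log it."""
    failures = []
    if challenger.auc < champion.auc - max_auc_drop:
        failures.append("auc regressed")
    if challenger.p99_latency_ms > latency_budget_ms:
        failures.append("latency budget exceeded")
    # A large swing in approvals can signal a bug or a policy change needing review.
    if abs(challenger.approval_rate - champion.approval_rate) > max_approval_shift:
        failures.append("approval rate shifted beyond threshold")
    return (not failures, failures)
```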

Real-Time Monitoring and Drift Detection

Live models are continuously monitored for data drift, fairness metrics, and prediction decay. Tools like Prometheus or Datadog track latency and error rates. Drift detection triggers retraining pipelines, ensuring models stay aligned with current borrower behavior and macroeconomic signals.
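A drift metric widely used in credit risk is the Population Stability Index (PSI), which compares the binned distribution of a feature or score in production against its training baseline: PSI = Σ (actual_i − expected_i) × ln(actual_i / expected_i). The sketch below is a stdlib implementation; the bin edges are an assumption you would normally derive from baseline quantiles, and the common rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate, above 0.25 significant) is a convention, not a standard.

```python
import bisect
import math


def bin_shares(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values per bin; sorted interior `edges` define len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    n = len(values)
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / n, eps) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float]) -> float:
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A retraining trigger can then be as simple as `psi(train_scores, live_scores, edges) > 0.25` evaluated on a schedule.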

Integrated Feedback Loops

Every borrower action — acceptance, default, prepayment — feeds back into the training loop. This creates a self-improving system where models sharpen over time, pricing gets more precise, and fraud detection becomes increasingly proactive.

Resilience and Recovery

Failures are inevitable — so your platform needs built-in recovery. Circuit breakers revert to safe defaults. Logs are queryable. Shadow testing stress-tests new models in production before full rollout. The system never forgets how a decision was made — and it always has a backup.
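A circuit breaker stops calling a failing dependency after repeated errors and serves a safe default until a cool-down passes, then lets a single trial call through. The sketch below is a minimal single-threaded version; the thresholds, the injectable clock, and the idea of "refer to manual review" as the safe default are illustrative assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; half-opens after `reset_after` seconds."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: don't touch the failing dependency
            # Half-open: allow one trial call; a single failure reopens the breaker.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```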

Machine Learning in Practice: Models and Algorithms

AI-powered lending isn’t about swapping in a model where a rule used to be — it’s about building a layered system of intelligence. From lead scoring to fraud detection, dynamic pricing to risk modeling, each model serves a specific purpose in the borrower journey. And they all work together to power smarter, faster decisions.

Lead Scoring That Goes Beyond Approval

When a borrower enters your funnel, your models predict more than just approval odds. You use multi-objective gradient boosting to estimate approval likelihood, default risk, and even the probability of drop-off before completion. Embedding-based models learn from borrower behavior over time, helping you score thin-file applicants by connecting them to lookalike patterns across your portfolio.

Real-Time Fraud Detection

Fraud moves fast — your models need to move faster. You run unsupervised models like Isolation Forests or autoencoders to detect anomalies during the application session itself. For complex patterns, you use graph-based techniques to uncover fraud rings connected through shared devices, accounts, or contact info. Sequence models like LSTMs or Transformers track behavioral changes across clicks and sessions, flagging temporal anomalies in real time.
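The simplest graph technique for ring detection is connected components over a bipartite graph of applications and shared identifiers: two applications land in the same component if they share a device, phone, or account, directly or through a chain. The union-find sketch below assumes identifiers are namespaced strings such as `"device:d1"` (hypothetical format) so they cannot collide with application IDs. Production systems often use graph databases or graph neural networks, and a flagged cluster is a review signal, not a verdict.

```python
from collections import defaultdict


class UnionFind:
    def __init__(self):
        self.parent = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def fraud_rings(applications: dict[str, set[str]], min_size: int = 3) -> list[set[str]]:
    """Group applications linked through any shared identifier.

    `applications` maps application ID -> identifiers like "device:abc" or "phone:555".
    """
    uf = UnionFind()
    for app_id, identifiers in applications.items():
        uf.find(app_id)  # register isolated applications too
        for ident in identifiers:
            uf.union(app_id, ident)
    groups = defaultdict(set)
    for app_id in applications:
        groups[uf.find(app_id)].add(app_id)
    return [g for g in groups.values() if len(g) >= min_size]
```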

Dynamic Pricing That Learns

Pricing is no longer a fixed APR table — it’s a dynamic optimization problem. You use reinforcement learning to continuously adjust rates and terms based on real-time borrower signals and portfolio feedback. A convex optimization layer ensures every price respects business rules and regulatory limits. Uplift modeling shows you which pricing strategies actually move conversion, while SHAP-based explainability keeps every decision auditable.
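As a deliberately simplified stand-in for the reinforcement learning loop, the sketch below uses an epsilon-greedy multi-armed bandit over a discrete set of APRs. It has no borrower context or state, so it is not full RL, and the constraint layer is reduced to its trivial case: dropping any arm outside a hypothetical floor and regulatory cap. The reward (the APR if the offer is accepted, zero otherwise) is a toy proxy that ignores default losses; a real objective would net out expected loss.

```python
import random


class EpsilonGreedyPricer:
    """Chooses an APR from discrete arms and learns the mean reward per arm."""

    def __init__(self, aprs: list[float], apr_floor: float, apr_cap: float,
                 epsilon: float = 0.1, seed: int = 0):
        # Constraint layer (simplified): keep only arms within [floor, cap].
        self.aprs = [a for a in aprs if apr_floor <= a <= apr_cap]
        if not self.aprs:
            raise ValueError("no APR arm satisfies the constraints")
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.aprs}
        self.values = {a: 0.0 for a in self.aprs}  # running mean reward per arm

    def choose(self) -> float:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.aprs)  # explore
        return max(self.aprs, key=lambda a: self.values[a])  # exploit

    def update(self, apr: float, reward: float) -> None:
        self.counts[apr] += 1
        # Incremental mean: avoids storing the full reward history.
        self.values[apr] += (reward - self.values[apr]) / self.counts[apr]
```

In a simulation where acceptance falls as APR rises, the pricer converges on the arm with the best APR × acceptance trade-off while never offering a rate above the cap.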

Risk Models That Adapt Over Time

Predicting default, prepayment, and loss is core to underwriting. You use survival models like Cox or DeepSurv to estimate time to default or early closure. Transformers and temporal convolutional networks (TCNs) process transaction time series, while macro signal fusion layers bring in economic data like interest rates or inflation. For portfolio-level forecasting, you blend ARIMA, LSTM, and Prophet into ensemble models that generate stress-tested risk curves under different economic conditions.
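Survival models earn their place because they handle censoring: loans that are still performing, or that prepaid, tell you a borrower had not defaulted up to that point. The sketch below is not Cox or DeepSurv; it is the non-parametric Kaplan-Meier estimator, a common baseline against which those models are validated. Input names are hypothetical.

```python
def kaplan_meier(durations: list[float], defaulted: list[bool]) -> list[tuple[float, float]]:
    """Return (time, survival probability) at each observed default time.

    `defaulted[i]` is False for censored loans (still performing, repaid, or closed early).
    """
    data = sorted(zip(durations, defaulted))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group all loans that end at the same time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= removed  # both defaults and censored loans leave the risk set
    return curve
```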

Together, these models form a decision fabric — one that adapts to each borrower, reacts to changing signals, and helps your platform manage risk, price dynamically, and stay ahead of fraud. You’re not running one model — you’re orchestrating many, all tuned for speed, accuracy, and compliance.

Stress Testing Against Macroeconomic Shocks

Your models might perform flawlessly in stable conditions — but credit markets don’t stay stable for long. Economic downturns, interest rate spikes, and employment shocks can break assumptions that once held true. That’s why stress testing isn’t optional. It’s how you ensure your AI-driven decisions hold up under pressure.

Mitigation Strategies:

- Use synthetic stress scenarios based on historical macroeconomic shocks (e.g., 2008, 2020).

- Integrate external leading indicators (e.g., yield curve inversion, credit spread widening) into pricing and risk models.

- Maintain a parallel “crisis mode” policy tree that activates under adverse event triggers (e.g., >10% unemployment).
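The strategies above can be wired together with two small pieces: a stress calculation using the standard expected-loss identity EL = PD × LGD × EAD, and a trigger that switches to the crisis policy tree. The sketch applies a uniform PD multiplier per scenario, which is a crude simplification (real stress tests model PD as a function of macro variables), and the multiplier values are hypothetical placeholders to be calibrated from history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Loan:
    pd: float    # 12-month probability of default under baseline conditions
    lgd: float   # loss given default, as a fraction of exposure
    ead: float   # exposure at default, in currency units


# Hypothetical scenario multipliers applied to baseline PDs.
SCENARIOS = {"baseline": 1.0, "2008_like": 2.5, "2020_like": 1.8}
CRISIS_UNEMPLOYMENT_TRIGGER = 0.10  # example trigger: >10% unemployment


def expected_loss(loans: list[Loan], pd_multiplier: float = 1.0) -> float:
    # EL = PD x LGD x EAD, with the stressed PD capped at 1.
    return sum(min(loan.pd * pd_multiplier, 1.0) * loan.lgd * loan.ead for loan in loans)


def active_policy(unemployment_rate: float) -> str:
    return "crisis_mode" if unemployment_rate > CRISIS_UNEMPLOYMENT_TRIGGER else "standard"
```

Running `expected_loss` across every entry in `SCENARIOS` gives the stressed loss curve that risk teams and regulators review alongside the baseline.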

With stress testing built in, your platform doesn’t just optimize for the present. It prepares for the shocks that haven’t hit yet — and shows regulators, auditors, and risk teams that your AI can be trusted even when the economy can’t.

Data Architecture for Scalable AI

When you’re building an AI-native lending platform, data isn’t just infrastructure — it’s what powers every decision. You need an architecture that delivers fresh, reliable, and governed data with low latency, from ingestion to model deployment.

You build on a versioned lakehouse using Delta Lake or Apache Iceberg to unify batch and streaming data. Schema enforcement through a registry such as Confluent Schema Registry (with Avro or Protobuf schemas) keeps data consistent across services.

Instead of organizing data by source, you structure it around modeling entities — borrower ID, loan ID — so features are instantly retrievable during inference. Column-level lineage is tracked with OpenLineage, helping you understand how upstream changes impact model inputs. Every feature query is versioned, ensuring training and production data always align.
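Keeping training and production aligned depends on point-in-time correctness: a training row for an application made at time t may only use feature values known at or before t. Feature stores such as Feast and Hopsworks implement this as a point-in-time join; the in-memory sketch below illustrates the "as of" lookup and is not their API. Class and function names are hypothetical.

```python
import bisect
from collections import defaultdict
from typing import Optional


class FeatureLog:
    """Append-only history of feature values per entity, queried 'as of' a timestamp."""

    def __init__(self):
        self._times = defaultdict(list)   # entity_id -> sorted write times
        self._values = defaultdict(list)  # entity_id -> feature dicts aligned with _times

    def write(self, entity_id: str, ts: int, features: dict) -> None:
        i = bisect.bisect_right(self._times[entity_id], ts)
        self._times[entity_id].insert(i, ts)
        self._values[entity_id].insert(i, features)

    def as_of(self, entity_id: str, ts: int) -> Optional[dict]:
        """Latest features written at or before `ts`; never leaks future values."""
        times = self._times.get(entity_id, [])
        i = bisect.bisect_right(times, ts)
        return self._values[entity_id][i - 1] if i else None


def training_rows(log: FeatureLog, labels: list[tuple[str, int, int]]) -> list[tuple[Optional[dict], int]]:
    # labels: (entity_id, decision_timestamp, outcome)
    return [(log.as_of(entity_id, ts), y) for entity_id, ts, y in labels]
```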

Real-Time Streaming and Event-Driven Architecture

To power low-latency credit decisions, your platform needs to shift from batch processing to event-driven data flows. Real-time streaming ensures that borrower actions, data pulls, and scoring signals are captured and acted upon instantly — not hours later.

Kafka or Pulsar streams borrower applications, credit bureau updates, and repayment activity in real time. Apache Flink transforms these into model-ready features as the events arrive.

Event types like “application_submitted” or “income_verified” trigger downstream scoring and pricing. These pipelines are designed to maintain <200ms latency from event to decision.

Schemas evolve with forward/backward compatibility, so pipelines don’t break when data sources change. This ensures reliable inference in a continuously updating ecosystem.
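The compatibility rules follow the same logic as Avro schema resolution: a field the reader expects but the writer omitted is filled from the reader's default (an error if there is none), and a field the writer sent but the reader does not know is ignored. The sketch below reproduces those two rules on plain dicts; it is not the Avro library, and the schema contents are hypothetical.

```python
from typing import Any

REQUIRED = object()  # sentinel: field has no default

# Hypothetical reader schema v2: "employment_status" was added with a default.
READER_SCHEMA_V2 = {
    "application_id": REQUIRED,
    "annual_income": REQUIRED,
    "employment_status": "unknown",
}


def read_event(raw: dict[str, Any], schema: dict[str, Any]) -> dict[str, Any]:
    out = {}
    for name, default in schema.items():
        if name in raw:
            out[name] = raw[name]
        elif default is REQUIRED:
            raise ValueError(f"missing required field {name!r}")
        else:
            out[name] = default  # backward compatible: older writers still parse
    return out  # forward compatible: fields unknown to the reader are dropped
```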

Data Governance, Quality, and Compliance

In lending, bad data isn’t just a bug — it’s a liability. Your AI systems are only as strong as the trust you have in the data behind them. That means every pipeline must be governed, monitored, and compliant by design.

You define contracts for every dataset — freshness, completeness, and schema adherence. Data quality is monitored through tools like Monte Carlo or Soda, with auto-alerts on anomalies.
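Dataset-level contracts differ from record-level schema checks: they assert properties of the whole table, such as how recently it was updated and how many values are missing. Tools like Monte Carlo and Soda offer these as managed or declarative checks; the sketch below only shows the underlying logic, with hypothetical thresholds and column names.

```python
from datetime import datetime, timedelta, timezone


def check_contract(rows: list[dict], last_updated: datetime, now: datetime,
                   max_staleness: timedelta, required: list[str],
                   max_null_rate: float = 0.01) -> list[str]:
    """Return freshness and completeness violations for one dataset snapshot."""
    violations = []
    if now - last_updated > max_staleness:
        violations.append(f"stale: last update {now - last_updated} ago")
    for col in required:
        nulls = sum(1 for r in rows if r.get(col) is None)
        # An empty dataset is treated as fully incomplete.
        rate = nulls / len(rows) if rows else 1.0
        if rate > max_null_rate:
            violations.append(f"{col}: null rate {rate:.1%} exceeds {max_null_rate:.1%}")
    return violations
```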

Access is role-based, with row- and column-level security enforced via Apache Ranger or Privacera. Sensitive fields are encrypted or tokenized. Immutable audit logs track every data touchpoint, helping you comply with GDPR, CCPA, GLBA, and OCC requirements.
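One common tokenization approach for fields that must stay joinable (for example, matching the same SSN across datasets) is a keyed HMAC: the same input and key always produce the same token, but the token cannot be reversed. The sketch uses Python's standard `hmac` module; the function name and the practice of mixing in the field name are illustrative choices. Because values like SSNs have low entropy, protection depends entirely on keeping the key secret (for example, in a KMS), and use cases that need detokenization require a token vault or encryption instead.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes, field: str) -> str:
    """Deterministic, keyed token: same input -> same token, enabling joins without raw PII.

    The field name is mixed in so equal strings in different fields get different tokens.
    """
    msg = f"{field}:{value}".encode("utf-8")
    return hmac.new(key, msg, hashlib.sha256).hexdigest()
```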

Security, Compliance, and Ethical AI in Lending

Your platform encrypts data in transit (TLS 1.3) and at rest with automated key rotation. For sandbox use, you generate synthetic datasets using GANs or VAEs, maintaining structure without exposing PII.

Differential privacy techniques inject controlled noise into sensitive training features. Bias detection runs on fairness metrics like disparate impact and equal opportunity. When drift or bias is detected, remediation steps such as threshold optimization or retraining are triggered automatically.
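The two fairness metrics named above can be computed directly from decisions and outcomes. Disparate impact is the ratio of approval rates between a protected and a reference group (a ratio below 0.8, the "four-fifths" rule of thumb, is a common screening flag); the equal opportunity gap is the difference in approval rates among borrowers who actually repaid. The sketch assumes outcomes are available for every applicant, which in lending usually means a holdout sample or reject inference, since declined applicants have no repayment history. Group labels are hypothetical.

```python
def rate(flags: list[bool]) -> float:
    return sum(flags) / len(flags) if flags else 0.0


def disparate_impact(approved: list[bool], group: list[str],
                     protected: str, reference: str) -> float:
    """Approval rate of the protected group divided by that of the reference group."""
    p = rate([a for a, g in zip(approved, group) if g == protected])
    r = rate([a for a, g in zip(approved, group) if g == reference])
    return p / r if r else float("nan")


def equal_opportunity_gap(approved: list[bool], repaid: list[bool], group: list[str],
                          protected: str, reference: str) -> float:
    """Difference in true positive rates: approval rates among borrowers who repaid."""
    def tpr(target: str) -> float:
        return rate([a for a, y, g in zip(approved, repaid, group) if g == target and y])
    return tpr(protected) - tpr(reference)
```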

Every prediction is explained using SHAP values and surfaced through internal dashboards. These are visible to compliance, legal, and customer service — or can be abstracted into plain-language formats for end users.
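For tree ensembles, SHAP values are typically computed with the `shap` library. For a linear model (including logistic regression in log-odds space), there is a closed form under a feature-independence assumption, φᵢ = wᵢ × (xᵢ − E[xᵢ]), which is handy for sanity-checking dashboards because the values must sum to f(x) − f(E[x]). The weights and feature names below are hypothetical.

```python
def linear_shap(weights: dict[str, float], x: dict[str, float],
                background_mean: dict[str, float]) -> dict[str, float]:
    """Exact SHAP values for a linear model, assuming independent features."""
    return {f: w * (x[f] - background_mean[f]) for f, w in weights.items()}


def log_odds(weights: dict[str, float], bias: float, x: dict[str, float]) -> float:
    return bias + sum(w * x[f] for f, w in weights.items())


# Hypothetical model: higher utilization lowers the score, longer history raises it.
WEIGHTS = {"utilization": -2.0, "months_history": 0.05}
BIAS = 1.0
MEANS = {"utilization": 0.3, "months_history": 48.0}
```

The local-accuracy property can be checked for any applicant: the attributions add up exactly to the gap between that applicant's score and the average applicant's score.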

UX and Borrower-Facing Explainability

Your borrowers don’t just want a decision — they want to understand it. You build transparency into the user experience by surfacing clear, personalized explanations every time a credit decision is made.

You don’t overwhelm users with SHAP plots or technical jargon. Instead, your system translates model logic into plain-language feedback: “High credit utilization impacted your rate” or “We need more history to evaluate this application.” These explanations help build trust, especially with borrowers new to formal credit.
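Translating attributions into plain language can be as simple as ranking the features that pushed the score down and mapping each to reviewed wording. The mapping table below is hypothetical and reuses the example messages from the text. In the US, adverse action notices under ECOA and Regulation B must state the specific principal reasons for a decision, so this table is typically owned and reviewed by compliance.

```python
# Hypothetical mapping from model features to borrower-facing language.
REASON_TEXT = {
    "utilization": "High credit utilization impacted your rate",
    "months_history": "We need more history to evaluate this application",
    "recent_inquiries": "Several recent credit applications affected this decision",
}


def top_reasons(attributions: dict[str, float], k: int = 2) -> list[str]:
    """Pick the k features that lowered the score most and translate them."""
    negative = sorted((v, f) for f, v in attributions.items() if v < 0)
    return [REASON_TEXT.get(f, "Other factors in your credit profile") for _, f in negative[:k]]
```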

When a borrower disagrees, you give them a path to appeal. You offer in-app options to upload new documents, trigger manual review, or ask for reconsideration — without starting over.

You also let borrowers experiment safely. Through what-if tools, users can adjust income, loan amount, or tenure and see how those changes would affect their outcomes — powered by the same models running behind the scenes.

Case Study: Dynamic Pricing for SME Lending

A mid-market SME lender was losing ground to faster competitors. Approval rates had stagnated, unit economics were flat, and the fixed APR table couldn’t respond to shifting borrower demand or market volatility. In an industry where a 1% improvement in approval rates can translate into millions in annual revenue, this lender needed a way to approve more good loans without increasing risk exposure.

By shifting to real-time AI pricing and event-driven infrastructure, they transformed both revenue and compliance performance — without increasing risk.

What We Built:

- A real-time pricing engine using reinforcement learning

- Streaming feature ingestion + automated retraining

- Embedded SHAP-based explainability for compliance

Impact:

- +38% revenue per loan

- <120ms decision latency

- Zero compliance gaps across audits

Implementation Plan for ML-Driven Lending Platforms

You roll out in three phases:

- Phase 1 (0–3 months): Launch MVP models using offline training and a basic feature store. Track training data, set up drift detection, and enforce schema contracts.

- Phase 2 (3–6 months): Shadow deploy models in production, enable automated retraining triggers, and A/B test pricing strategies.

- Phase 3 (6–12 months): Migrate all key decisions to ML, close the feedback loop, enforce versioning at every layer, and activate real-time pricing across product lines.

Throughout, measure success using LTV/CAC, approval lift, and cost per decision — not just accuracy. Involve compliance early and instrument dashboards with both technical and business KPIs.

Organizational Prerequisites for AI-Native Lending

AI-native lending isn’t just about code — it’s about ownership, trust, and accountability. You embed cross-functional pods — ML, product, compliance, engineering — around each core function. DataOps and MLOps teams maintain freshness, uptime, and lineage.

A model risk committee reviews fairness, performance, and regulatory alignment. Each model, feature pipeline, and policy is mapped to an owner via RACI. With this structure in place, AI becomes an asset that evolves safely, adapts quickly, and scales with your business.

How V2Solutions Can Help

V2Solutions helps financial institutions build AI-powered lending platforms that scale — securely, compliantly, and in real time.

What We Deliver:

- Real-time ML infrastructure: Streaming data, feature stores, and low-latency decision engines.

- Production-grade MLOps: Model registries, CI/CD, and monitoring built for finance.

- AI with governance: Bias detection, explainability, and audit-ready pipelines.

- End-to-end delivery: From architecture to deployment — we integrate with your teams and ship at velocity.

Conclusion: Shaping the Next Generation of Lending

Lending is no longer just about credit scores and static rules — it’s about delivering intelligent, real-time decisions backed by explainable, adaptive AI systems.

The institutions that lead will be those who:

- Shift from batch to real-time decisioning

- Embrace dynamic pricing over static ladders

- Prioritize governance, explainability, and resilience

Success in lending now depends on infrastructure, not intuition.

Every month spent on legacy batch systems is a month your competitors get faster, smarter, and more personalized.

Let’s build an AI-native lending platform that approves in milliseconds, prices dynamically, and scales securely — so you lead the market instead of chasing it.

Connect with V2Solutions today to start your build.

Author

Sukhleen Sahni